Yioop Documentation v9.5 (under revision for 9.3)

Overview

Getting Started

If you have downloaded a prior version of the Yioop software, you may prefer to use one of the following PDF captures of the software documentation:

- Version 8.0.0: Version 8.0.0 Documentation-PDF

- Version 7.1.4: Version 7.1.4 Documentation-PDF

- Version 6.0.4: Version 6.0.4 Documentation-PDF

- Version 5.0.4: Version 5.0.4 Documentation-PDF

- Version 4.0.1: Version 4.0 Documentation-PDF

- Version 3.20: Version 3.20 Documentation-PDF

- Version 2.10: Version 2.10 Documentation-PDF

This document serves as a detailed reference for the Yioop search engine. If you want to get started using Yioop now, you probably want to first read the Installation Guides page and look at the Yioop Video Tutorials Wiki. If you cannot find your particular machine configuration there, you can check the Yioop Requirements section followed by the more general Installation and Configuration instructions.

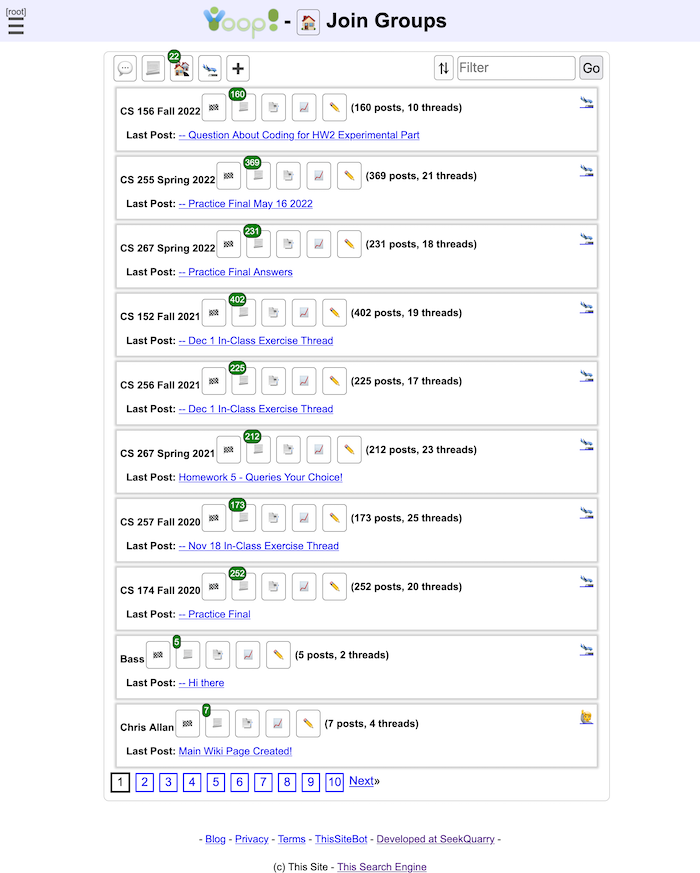

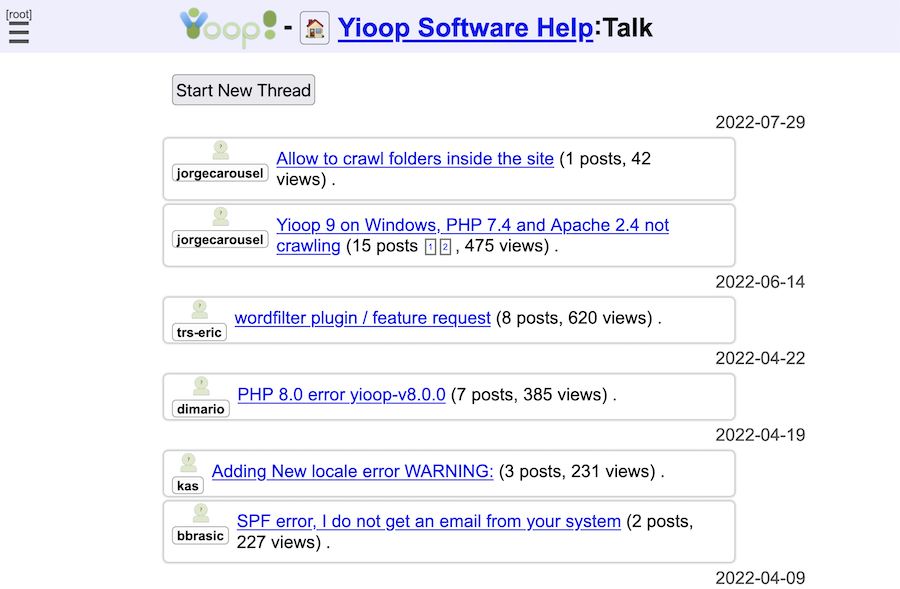

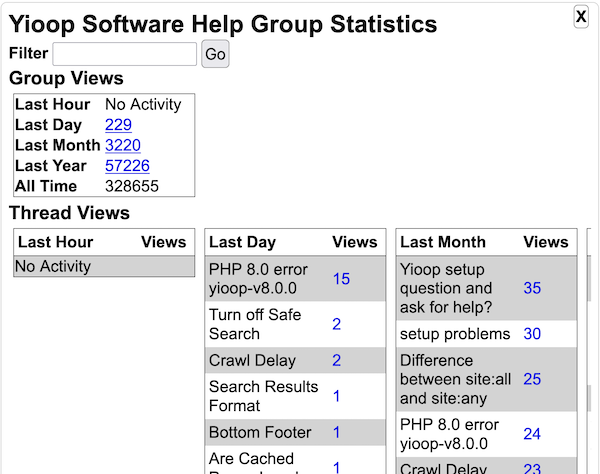

Yioop.com, the demo site for Yioop software, allows people to register accounts. Once registered, if you have questions about Yioop and its installation, you can join the Yioop Software Help group and post your questions there. This group is frequently checked by the creators of Yioop, and you will likely get a quick response.

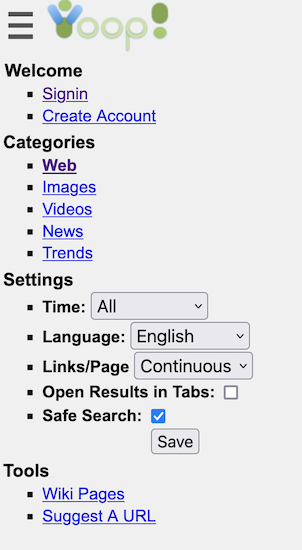

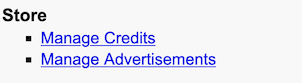

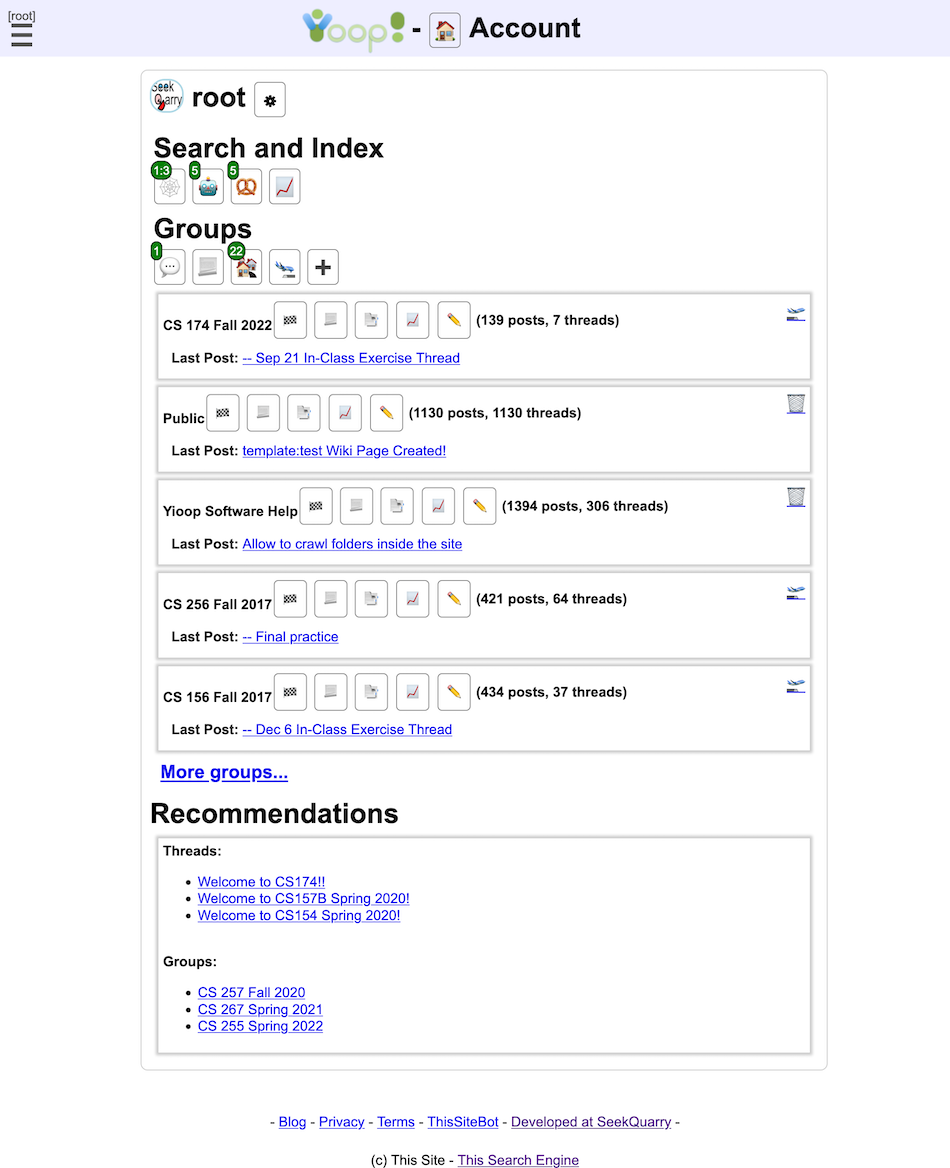

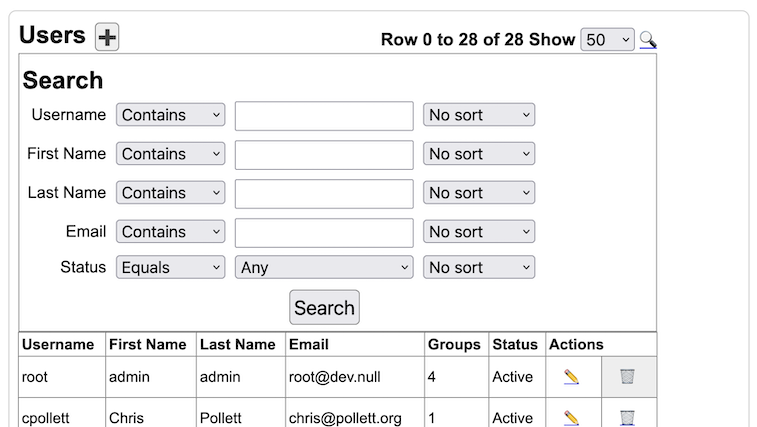

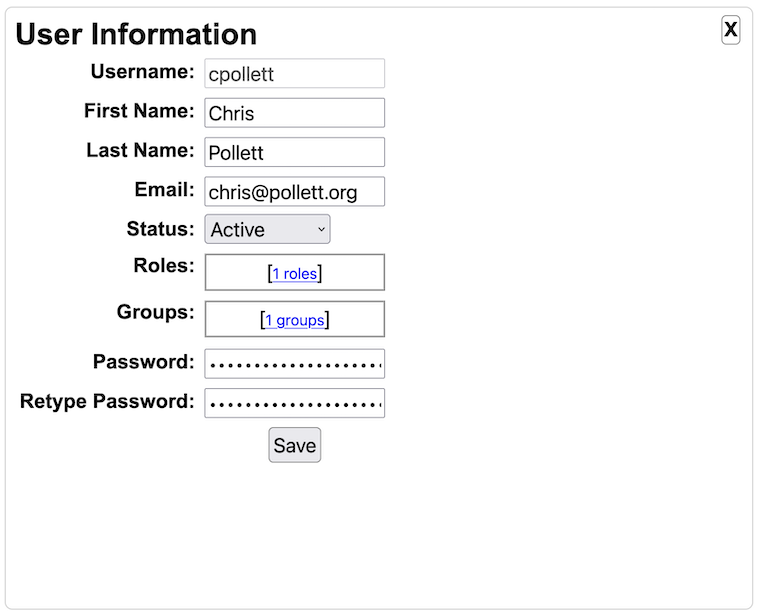

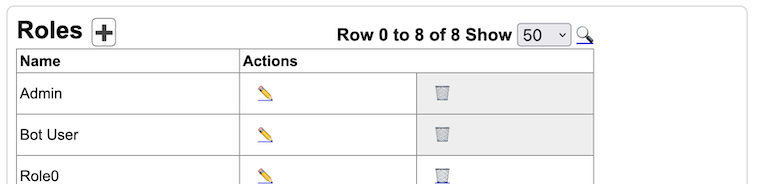

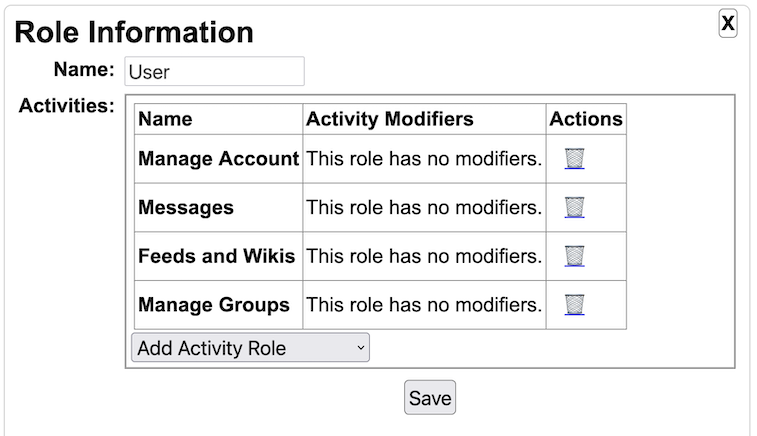

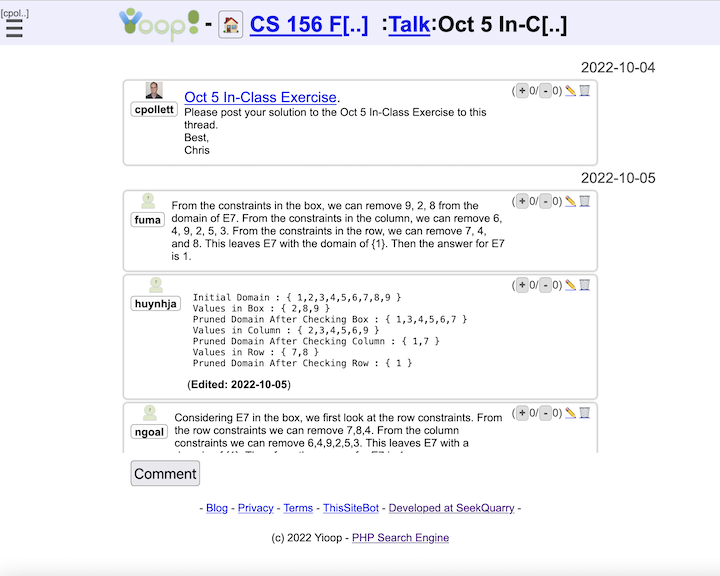

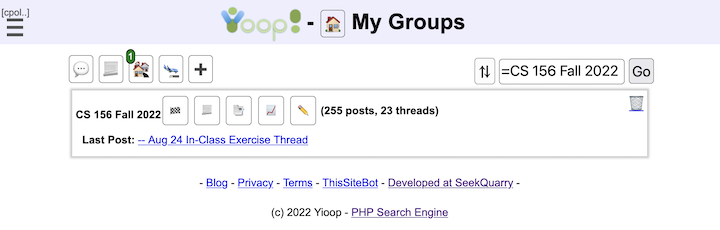

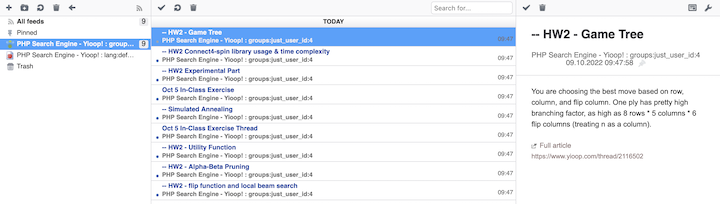

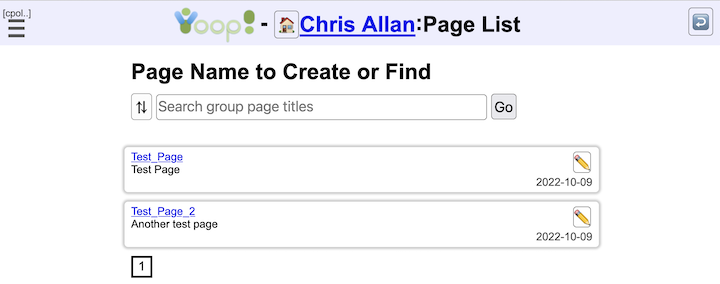

Having a Yioop account also allows you to experiment with some of the features of Yioop beyond search, such as Yioop Groups and Wikis, without needing to install the software yourself. The Search and User Interface, Managing Users, Roles, and Groups, and Feeds and Wikis sections below could serve as a guide to testing the portion of the site general users have access to on Yioop.com.

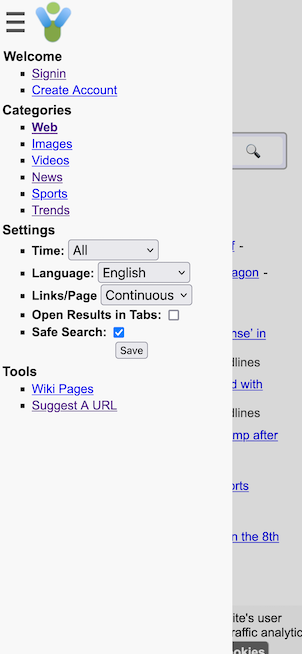

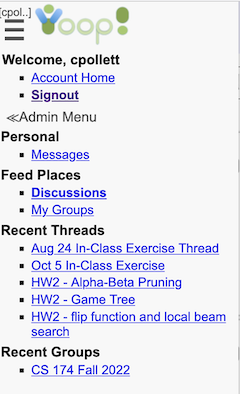

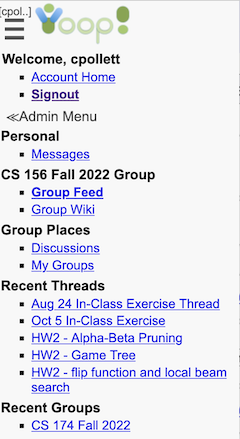

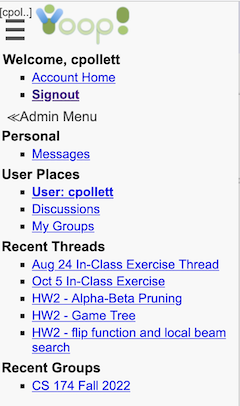

Activities in Yioop can be found by clicking on the hamburger menu icon. The contents of the menu revealed by clicking on this icon change depending on one of four contexts: Search Web context, Account Admin context, Group Discussion context, and Group Wiki context. The Account Home icon can be used on many pages to return you to the page you see when you first sign in to your account.

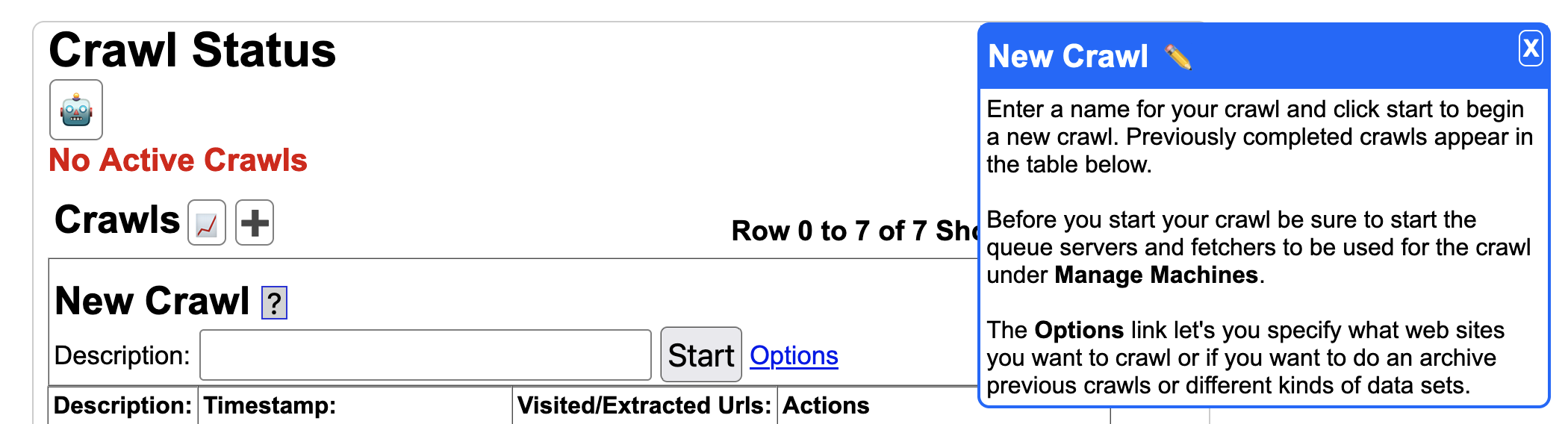

When using Yioop software, if you do not understand a feature, make sure to also check out the integrated help system throughout Yioop. Clicking on a question mark icon will reveal an additional blue column on a page with help information as seen below:


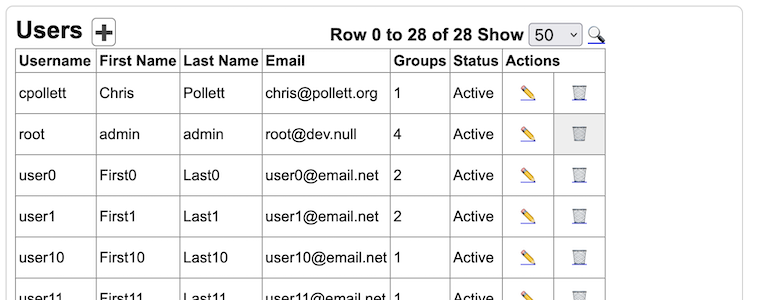

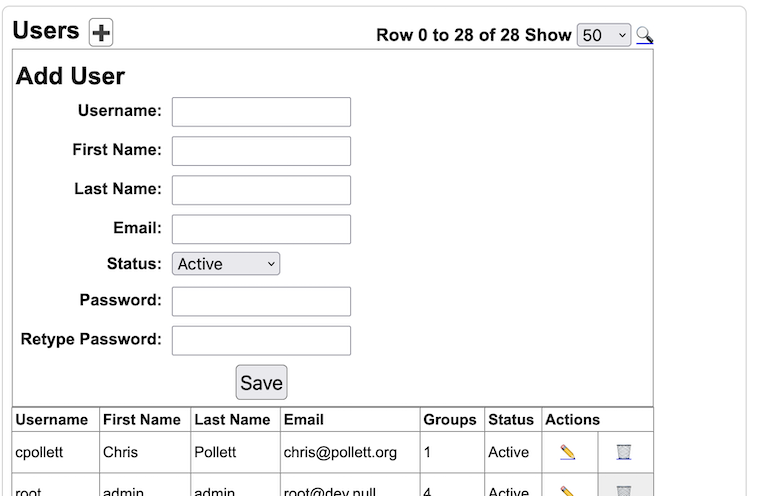

Most Yioop activities share similar interface features. Namely, the Add icon is used to add the main entity controlled by an activity, such as a User, Group, Role, Web Crawl, or Web Scraper. To open a search form for a particular entity controlled by an activity, click on the search icon.

The Icon Glossary has a list of the common icons used in Yioop.


Introduction

The Yioop search engine is designed to allow users to produce indexes of a web-site or a collection of web-sites. The number of pages a Yioop index can handle ranges from small sites to sites containing tens or hundreds of millions of pages. The largest index so far created using Yioop is slightly over a billion pages. In contrast, a search engine like Google maintains an index of tens of billions of pages. Nevertheless, since you, the user, have control over the exact sites which are being indexed with Yioop, you have much better control over the kinds of results that a search will return. Yioop provides a traditional web interface to do queries, an RSS API, and a function API. It also supports many common features of a search portal such as user discussion groups, blogs, wikis, and a news and trends aggregator. In this section we discuss some of the different search engine technologies which exist today, how Yioop fits into this eco-system, and when Yioop might be the right choice for your search engine needs. In the remainder of this document after the introduction, we discuss how to get and install Yioop; the files and folders used in Yioop; the various crawl, search, social portal, and administration facilities in Yioop; localization in the Yioop system; building a site using the Yioop framework; embedding Yioop in an existing web-site; customizing Yioop; and the Yioop command-line tools.

Since the mid-1990s a wide variety of search engine technologies have been explored. Understanding some of this history is useful in understanding Yioop's capabilities. In 1994, WebCrawler, one of the earliest still widely-known search engines, only had an index of about 50,000 pages which was stored in an Oracle database. Today, databases are still used to create indexes for small to medium size sites. An example of such a search engine written in PHP is Sphider. Given that a database is being used, one common way to associate a word with a document is to use a table with columns like word id, document id, score. Even if one is only extracting about a hundred unique words per page, this table would need on the order of a hundred million rows for even a million page index. This edges towards the limits of the capabilities of database systems, although techniques like table sharding can help to some degree. The Yioop engine uses a database to manage some things like users and roles, but uses its own web archive format and indexing technologies to handle crawl data. This is one of the reasons that Yioop can scale to larger indexes.
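As a back-of-the-envelope check of the table-size estimate above, the following sketch multiplies the number of pages by the number of unique words per page. The function names and the 16 bytes per row figure are illustrative assumptions, not values taken from Yioop or from any particular database.

```python
def posting_rows(num_pages: int, unique_words_per_page: int) -> int:
    """Rows in a (word id, document id, score) table: one per distinct word per page."""
    return num_pages * unique_words_per_page


def table_bytes(rows: int, bytes_per_row: int = 16) -> int:
    """Raw storage estimate; 16 bytes per row (two 4-byte ids, one 8-byte score) is an assumption."""
    return rows * bytes_per_row


if __name__ == "__main__":
    rows = posting_rows(1_000_000, 100)
    print(f"{rows:,} rows, about {table_bytes(rows) / 1e9:.1f} GB before any database indexes")
```

Under these assumptions a million-page crawl already yields a hundred million rows, before counting the B-tree indexes a database would need on the word id column.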

When a site that is being indexed consists of dynamic pages rather than the largely static page situation considered above, and those dynamic pages get most of their text content from a table column or columns, different search index approaches are often used. Many database management systems like MySQL/MariaDB support the ability to create full text indexes for text columns. A faster, more robust approach is to use a stand-alone full text index server such as Sphinx. However, for these approaches to work the text you are indexing needs to be in a database column or columns, or have an easy to define "XML mapping". Nevertheless, these approaches illustrate another common thread in the development of search systems: search as an appliance, where you have a separate search server and access it either through a web-based API or through function calls.

Yioop has both a search function API as well as a web API that can return Open Search RSS results or a JSON variant. These can be used to embed Yioop within your existing site. If you want to create a new search engine site, Yioop

provides all the basic features of web search portal. It has its own account management system with the ability to set up groups that have both discussions boards and wikis with various levels of access control. The built in Public group's wiki together with the GUI configure page can be used to completely customize the look and feel of Yioop. Third party display ads can also be added through the GUI interface. If you want further customization, Yioop

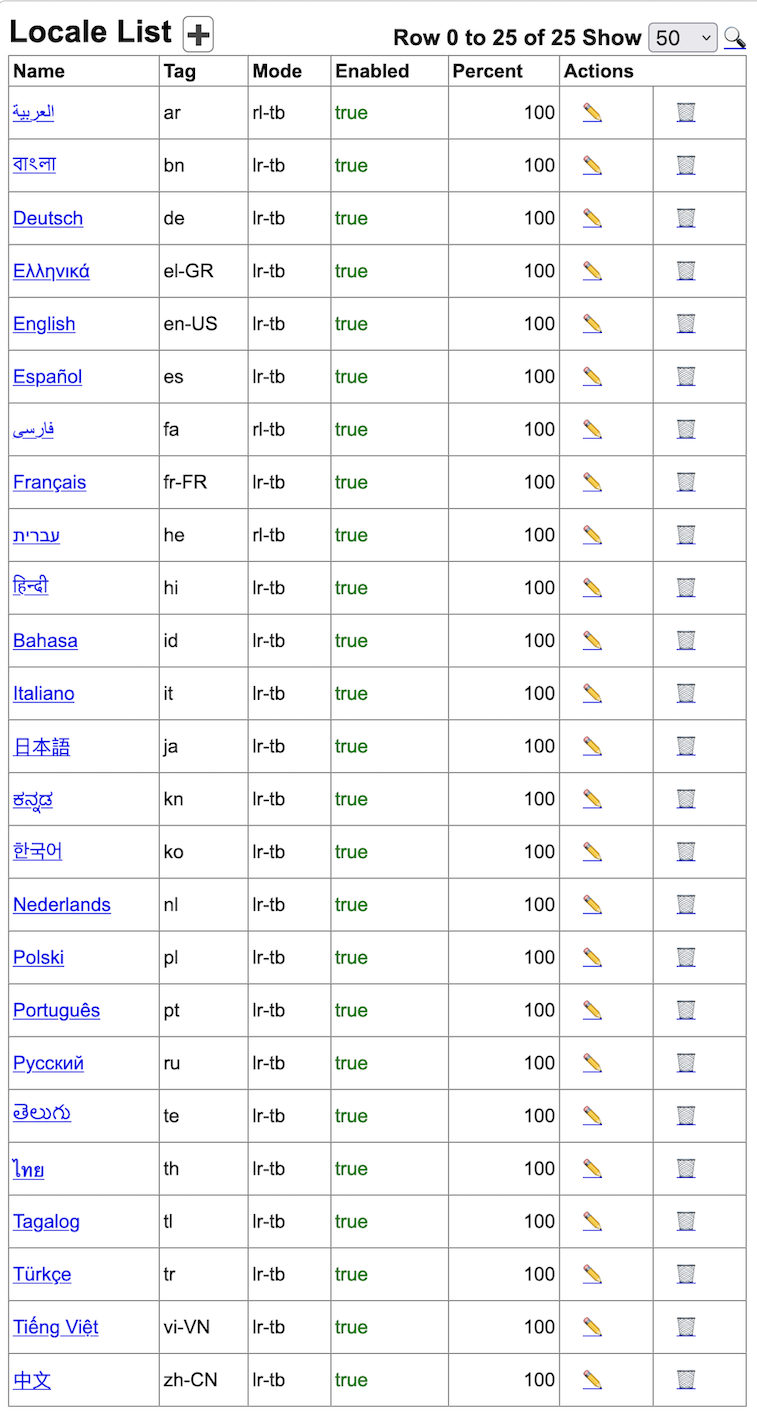

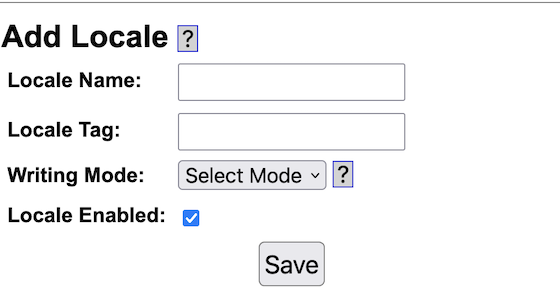

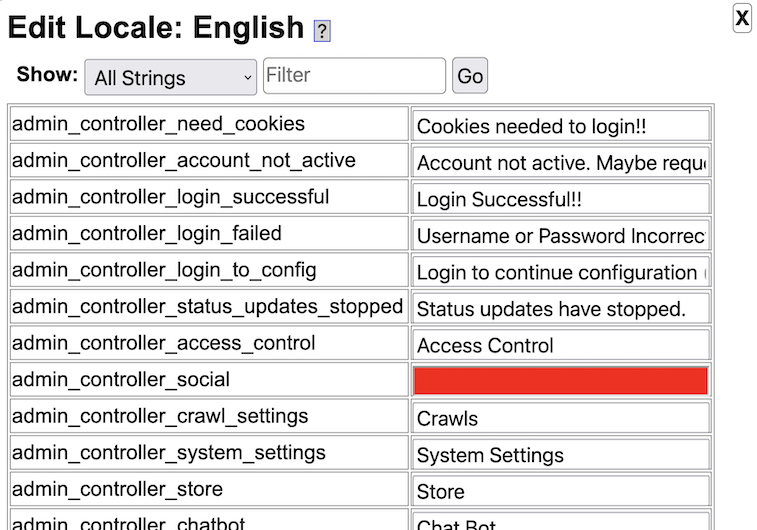

offers a web-based, model-view-adapter (a variation on model-view-controller) framework with a web-interface for localization.
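To give a feel for consuming Open Search RSS results from another site, here is a minimal sketch that parses such a feed with Python's standard library. The sample feed, its titles and links, and the function name `parse_results` are hypothetical; the exact elements a given Yioop installation returns are not specified in this section, so treat the structure as an assumption based on the OpenSearch 1.1 response elements.

```python
import xml.etree.ElementTree as ET

OPENSEARCH_NS = {"opensearch": "http://a9.com/-/spec/opensearch/1.1/"}

# Hypothetical response body; a real feed would come from your own installation.
SAMPLE_FEED = """<rss version="2.0" xmlns:opensearch="http://a9.com/-/spec/opensearch/1.1/">
  <channel>
    <title>Example search: crawler</title>
    <opensearch:totalResults>2</opensearch:totalResults>
    <item><title>First result</title><link>https://example.com/1</link></item>
    <item><title>Second result</title><link>https://example.com/2</link></item>
  </channel>
</rss>"""


def parse_results(feed_xml: str) -> tuple[int, list[tuple[str, str]]]:
    """Return the reported total and a list of (title, link) pairs."""
    channel = ET.fromstring(feed_xml).find("channel")
    total = int(channel.findtext("opensearch:totalResults", "0", OPENSEARCH_NS))
    items = [(item.findtext("title", ""), item.findtext("link", ""))
             for item in channel.findall("item")]
    return total, items


if __name__ == "__main__":
    total, items = parse_results(SAMPLE_FEED)
    print(total)
    for title, link in items:
        print(title, link)
```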

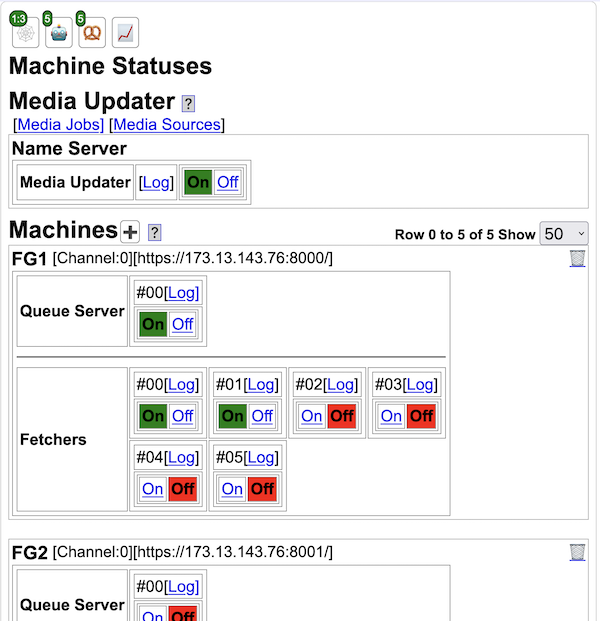

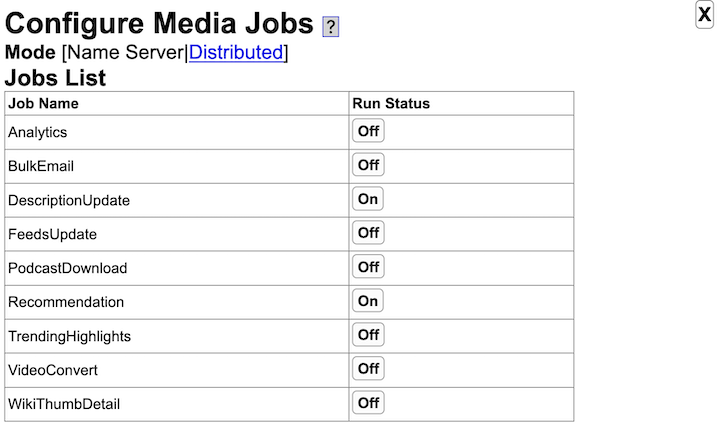

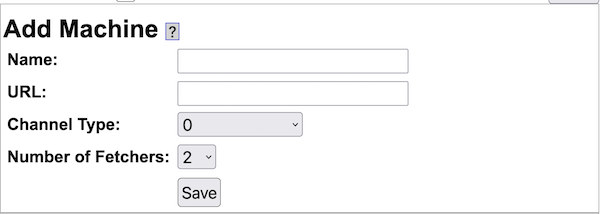

By 1997 commercial sites like Inktomi and AltaVista already had tens or hundreds of millions of pages in their indexes [ P1994 ] [ P1997a ] [ P1997b ]. Google [ BP1998 ] circa 1998 in comparison had an index of about 25 million pages. These systems used many machines each working on parts of the search engine problem. On each machine there would, in addition, be several search related processes, and for crawling, hundreds of simultaneous threads would be active to manage open connections to remote machines. Without threading, downloading millions of pages would be very slow. Yioop is written in PHP. This language is the 'P' in the very popular LAMP web platform. This is one of the reasons PHP was chosen as the language of Yioop. Unfortunately, PHP does not have built-in threads. However, the PHP language does have a multi-curl library (implemented in C) which supports many simultaneous page downloads from a single process. This is what Yioop uses. Like these early systems, Yioop also supports the ability to distribute the task of downloading web pages to several machines. As the problem of managing many machines becomes more difficult as the number of machines grows, Yioop further has a web interface for turning on and off the processes related to crawling on remote machines managed by Yioop.

There are several aspects of a search engine besides downloading web pages that benefit from a distributed computational model. One of the reasons Google was able to produce high quality results was that it was able to accurately rank the importance of web pages. The computation of this page rank involves repeatedly applying Google's normalized variant of the web adjacency matrix to an initial guess of the page ranks. This problem naturally decomposes into rounds. Within a round the Google matrix is applied to the current page rank estimates of a set of sites. This operation is reasonably easy to distribute to many machines. Computing how relevant a word is to a document is another task that benefits from multi-round, distributed computation. When a document is processed by indexers on multiple machines, words are extracted and a stemming algorithm such as [ P1980 ] or a character n-gramming technique might be employed (a stemmer would extract the word jump from words such as jumps, jumping, etc.; converting jumping to 3-grams would make terms of length 3, i.e., jum, ump, mpi, pin, ing). For some languages like Chinese, where spaces between words are not always used, a segmenting algorithm like reverse maximal match might be used. Next a statistic such as BM25F [ ZCTSR2004 ] or DFR [ AvR2002 ] (or at least their non-query time components) is computed to determine the importance of that word in that document compared to that word amongst all other documents. To do this calculation one needs to compute global statistics concerning all documents seen, such as their average length, how often a term appears in a document, etc. If the crawling is distributed, it might take one or more merge rounds to compute these statistics based on partial computations on many machines. Hence, each of these computations benefits from allowing distributed computation to be multi-round.
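The round structure of the page rank computation described above can be illustrated with a minimal power iteration sketch. The damping factor of 0.85, the treatment of pages without out-links, the toy link graph, and the function name are all assumptions made for illustration; this is the textbook formulation, not Yioop's ranking code.

```python
def page_rank(links: dict[str, list[str]], damping: float = 0.85,
              rounds: int = 50) -> dict[str, float]:
    """Power iteration on a small link graph; each loop pass is one round."""
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(nodes)
    ranks = {node: 1.0 / n for node in nodes}      # initial guess: uniform
    for _ in range(rounds):
        # Every node first receives its share of the teleportation mass.
        new_ranks = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                share = damping * ranks[node] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # Page with no out-links: spread its rank evenly over all nodes.
                for other in nodes:
                    new_ranks[other] += damping * ranks[node] / n
        ranks = new_ranks
    return ranks


if __name__ == "__main__":
    toy_web = {"a": ["c"], "b": ["c"], "c": ["a"]}
    for node, rank in sorted(page_rank(toy_web).items()):
        print(node, round(rank, 3))
```

Within a round, the work for different source pages is independent, which is why this step distributes easily: each machine can compute the contributions of its own pages and the partial sums can be merged afterwards.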
Infrastructure such as the Google File System [ GGL2003 ], the MapReduce model [ DG2004 ], and the Sawzall language [ PDGQ2006 ] were built to make these multi-round distributed computation tasks easier. In the open source community, the Hadoop Distributed File System and Hadoop MapReduce play an analogous role [ W2015 ]. More recently, a theoretical framework has begun to be developed for what algorithms can be carried out as rounds of three steps: map inputs to a sequence of key-value pairs, shuffle pairs with the same keys to the same nodes, and reduce the key-value pairs at each node by some computation [ KSV2010 ]. This framework shows the map reduce model is capable of solving quite general cloud computing problems -- more than is needed just to deploy a search engine.
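The map, shuffle, and reduce steps can be sketched in a single process as follows. The example counts in how many documents each character 3-gram occurs, one of the global statistics mentioned earlier. All function names and the sample documents are hypothetical, and the sketch does not use the Hadoop or Google APIs; it only mirrors the shape of one round.

```python
from collections import defaultdict


def char_ngrams(word: str, n: int = 3) -> list[str]:
    """All length-n character windows of a word, in order."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


def map_phase(doc_id: str, text: str) -> list[tuple[str, str]]:
    """Map: emit one (term, doc_id) pair per distinct 3-gram in the document."""
    grams = {g for word in text.lower().split() for g in char_ngrams(word)}
    return [(gram, doc_id) for gram in sorted(grams)]


def shuffle_phase(pairs: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Shuffle: bring all values that share a key together."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: dict[str, list[str]]) -> dict[str, int]:
    """Reduce: the document frequency of each term."""
    return {key: len(set(values)) for key, values in grouped.items()}


if __name__ == "__main__":
    docs = {"d1": "jumping jumps", "d2": "jumped"}
    pairs = [p for doc_id, text in docs.items() for p in map_phase(doc_id, text)]
    print(reduce_phase(shuffle_phase(pairs)))
```

In a real deployment the map calls would run on the machines holding the documents and the shuffle would move pairs across the network; here the three phases are ordinary function calls so the data flow is easy to follow.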

Infrastructure such as this is not trivial for a small-scale business or individual to deploy. On the other hand, most small businesses and homes do have available several machines, not all of whose computational abilities are being fully exploited. Also, it is relatively cheap to rent multiple machines in a cloud service. So the capability to do distributed crawling and indexing in this setting exists. Further, high-speed internet for homes and small businesses is steadily getting better. Since the original Google paper, techniques to rank pages have been simplified [ APC2003 ]. It is also possible to compute some of the global statistics needed in BM25F or DFR using the single round model. More details on the exact ranking mechanisms used by Yioop can be found on the Yioop Ranking Mechanisms page.

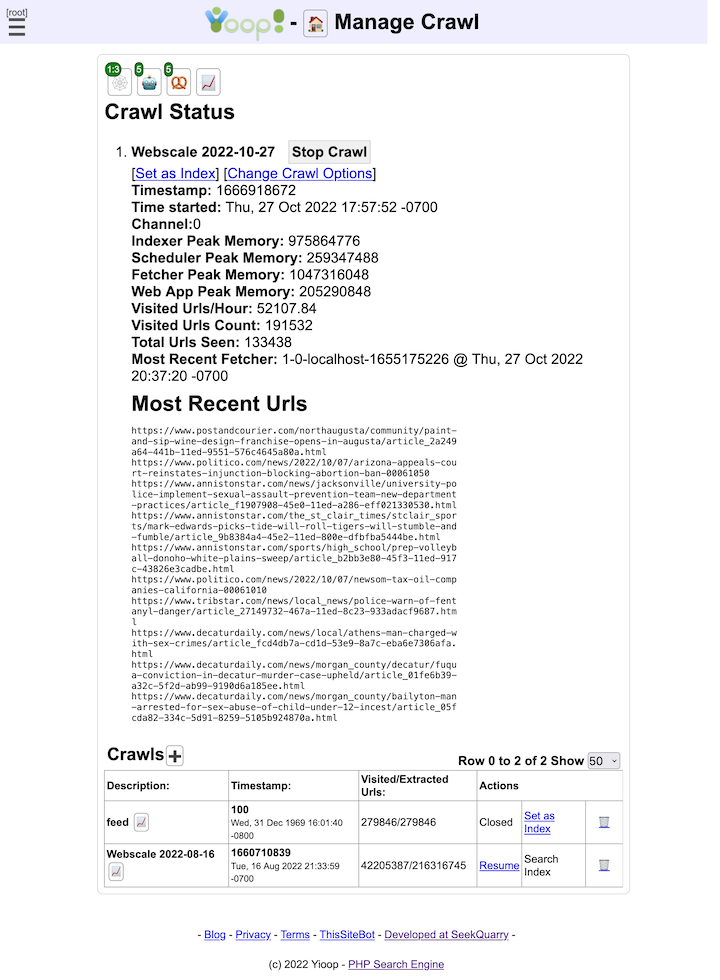

Yioop tries to exploit these advances to use a simplified distributed model which might be easier to deploy in a smaller setting. Each node in a Yioop system is assumed to have a web server running. One of the Yioop nodes' web apps is configured to act as a coordinator for crawls. It is called the name server. In addition to the name server, one might have several processes called queue servers that perform scheduling and indexing jobs, as well as fetcher processes which are responsible for downloading pages and for page processing such as the stemming, char-gramming, and segmenting mentioned above. Through the name server's web app, users can send messages to the queue servers and fetchers. This interface writes message files that queue servers periodically look for. Fetcher processes periodically ping the name server to find the name of the current crawl as well as a list of queue servers. Fetcher programs then periodically make requests in a round-robin fashion to the queue servers for messages and schedules. A schedule is data to process and a message has control information about what kind of processing should be done. A given queue server is responsible for generating schedule files for data with a certain hash value, for example, to crawl URLs whose host names hash to that queue server's id. As a fetcher processes a schedule, it periodically POSTs the result of its computation back to the responsible queue server's web server. The data is then written to a set of received files. The queue server as part of its loop looks for received files and merges their results into the index so far. So the model is in a sense one round: URLs are sent to the fetchers, summaries of downloaded pages are sent back to the queue servers and merged into their indexes. As soon as the crawl is over one can do text search on the crawl.
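The assignment of URLs to queue servers by hashing host names can be sketched as below. The use of CRC32 and of a simple modulus is an assumption chosen for brevity; the text does not say which hash function Yioop uses, and the function names are hypothetical.

```python
import zlib
from urllib.parse import urlsplit


def queue_server_for(url: str, num_queue_servers: int) -> int:
    """Pick a queue server index from a hash of the URL's host name."""
    host = urlsplit(url).hostname or ""
    return zlib.crc32(host.encode("utf-8")) % num_queue_servers


def partition(urls: list[str], num_queue_servers: int) -> dict[int, list[str]]:
    """Split a URL list into one bucket per queue server."""
    buckets: dict[int, list[str]] = {i: [] for i in range(num_queue_servers)}
    for url in urls:
        buckets[queue_server_for(url, num_queue_servers)].append(url)
    return buckets


if __name__ == "__main__":
    seeds = [
        "https://www.yioop.com/",
        "https://www.yioop.com/blog",
        "https://example.org/index.html",
    ]
    for server_id, assigned in partition(seeds, 2).items():
        print(server_id, assigned)
```

Because the hash is taken over the host name rather than the full URL, every URL of a given site lands in the same bucket, so a single scheduler sees all of that site's pending URLs.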
Deploying this computation model is relatively simple: the web server software needs to be installed on each machine, the Yioop software (which has the fetcher, queue server, and web app components) is copied to the desired location under the web server's document folder, each instance of Yioop is configured to know who the name server is, and finally, the fetcher programs and queue server programs are started.

As an example of how this scales, a 2018 Mac Mini running a queue server program can schedule and index about 60,000 pages/hour. This corresponds to the work of about 4 fetcher processes (roughly, you want 1GB and 1 core per fetcher). The checks by fetchers on the name server are lightweight, so adding another machine with a queue server and the corresponding additional fetchers allows one to effectively double this speed. This also has the benefit of speeding up query processing: when a query comes in, it gets split into queries for each of the queue servers' web apps, but each query only "looks" slightly more than half as far into the posting list as would occur in a single queue server setting. To further increase query throughput, the number of queries that can be handled at a given time, Yioop installations can also be configured as "mirrors" which keep an exact copy of the data stored in the site being mirrored. When a query request comes into a Yioop node, either it or any of its mirrors might handle it.
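The figures above can be turned into a rough capacity estimate. This sketch assumes throughput scales linearly with the number of queue servers, which the text only states for going from one to two; the constants come from the paragraph above, while the function name and the sample crawl size are hypothetical.

```python
PAGES_PER_HOUR_PER_QUEUE_SERVER = 60_000   # figure quoted in the text
FETCHERS_PER_QUEUE_SERVER = 4              # figure quoted in the text
GB_PER_FETCHER = 1                         # rule of thumb quoted in the text


def plan_crawl(total_pages: int, queue_servers: int) -> dict[str, float]:
    """Estimate resources and duration, assuming linear scaling (an assumption)."""
    fetchers = queue_servers * FETCHERS_PER_QUEUE_SERVER
    pages_per_hour = queue_servers * PAGES_PER_HOUR_PER_QUEUE_SERVER
    return {
        "fetchers": fetchers,
        "fetcher_memory_gb": fetchers * GB_PER_FETCHER,
        "fetcher_cores": fetchers,
        "hours": total_pages / pages_per_hour,
    }


if __name__ == "__main__":
    plan = plan_crawl(total_pages=10_000_000, queue_servers=2)
    print(plan["fetchers"], "fetchers,", round(plan["hours"] / 24, 1), "days")
```

Real crawl speed also depends on network bandwidth and on how politely target sites must be crawled, so the result is best read as a lower bound on duration.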

Since a multi-million page crawl involves downloading from the web rapidly over several days, Yioop supports the ability to dynamically change its crawl parameters as a crawl is going on. This allows a user, on request from a web admin, to disallow Yioop from continuing to crawl a site or to restrict the number of URLs/hour crawled from a site without having to stop the overall crawl. One can also inject new seed sites through a web interface while the crawl is occurring. This can help if someone suggests to you a site that might otherwise not be found by Yioop given its original list of seed sites. Crawling at high speed can cause a website to become congested and unresponsive. As of Version 0.84, if Yioop detects a site is becoming congested it can automatically slow down the crawling of that site. Finally, crawling at high speed can cause your domain name server (the server that maps www.yioop.com to 173.13.143.74) to become slow. To reduce the effect of this, Yioop supports domain name caching.
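The idea behind domain name caching can be illustrated with a small time-limited cache in front of a resolver. This is a generic sketch, not Yioop's implementation: the class name, the one hour default lifetime, and the injectable resolver and clock parameters are assumptions made so the example is easy to test.

```python
import socket
import time
from typing import Callable


class DnsCache:
    """Remember host -> IP answers for ttl_seconds to avoid repeated lookups."""

    def __init__(self, resolver: Callable[[str], str] = socket.gethostbyname,
                 ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._resolver = resolver
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[str, float]] = {}

    def lookup(self, host: str) -> str:
        now = self._clock()
        cached = self._entries.get(host)
        if cached is not None and cached[1] > now:
            return cached[0]              # still fresh: no resolver call
        address = self._resolver(host)    # cache miss or expired entry
        self._entries[host] = (address, now + self._ttl)
        return address
```

A crawler that fetches thousands of pages from the same host would call `lookup` once per request, but the underlying resolver would be contacted only once per lifetime of the cached entry.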

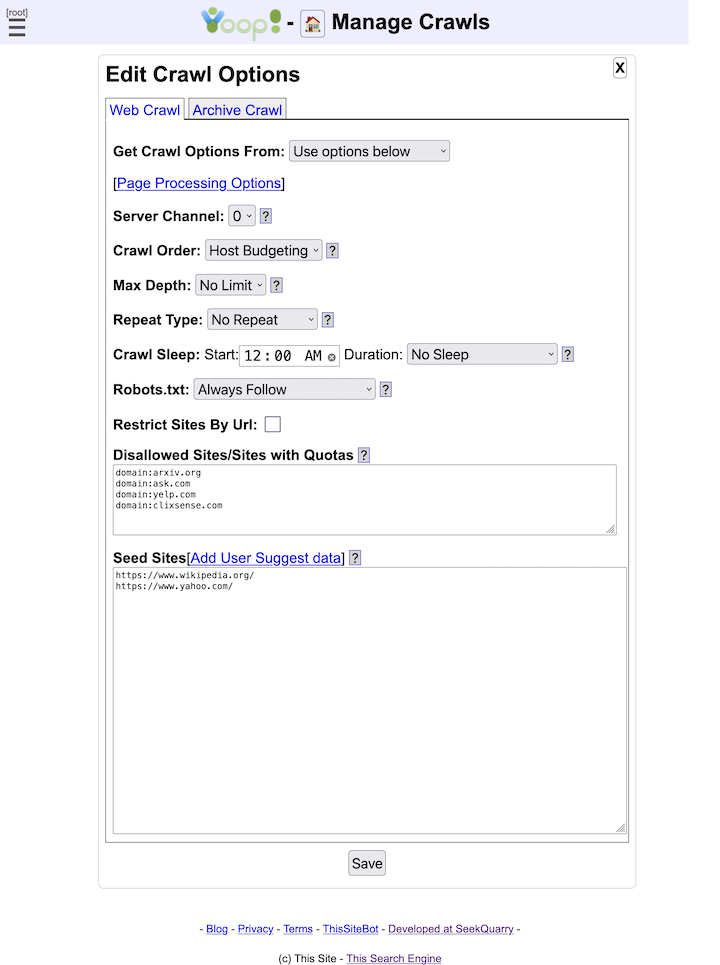

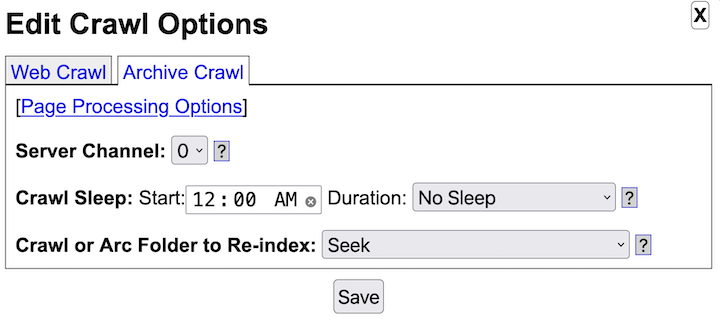

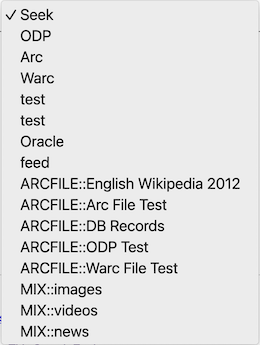

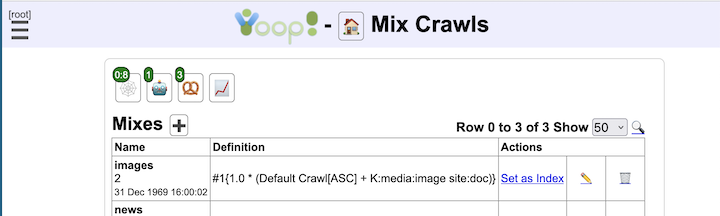

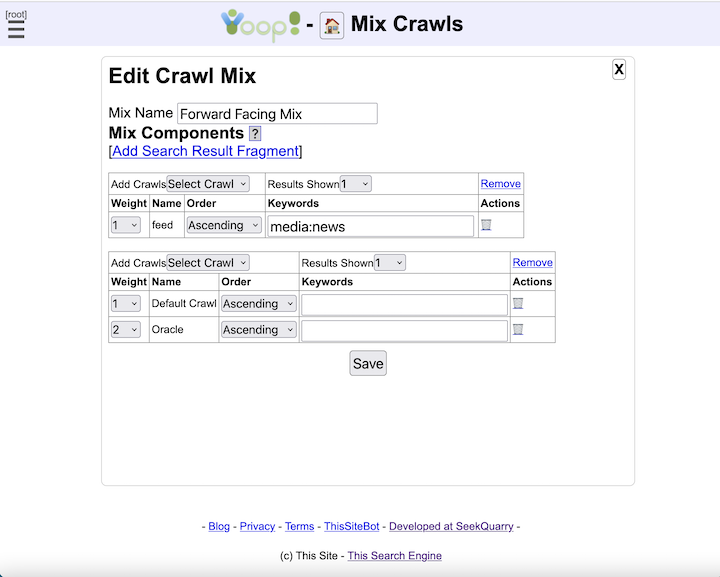

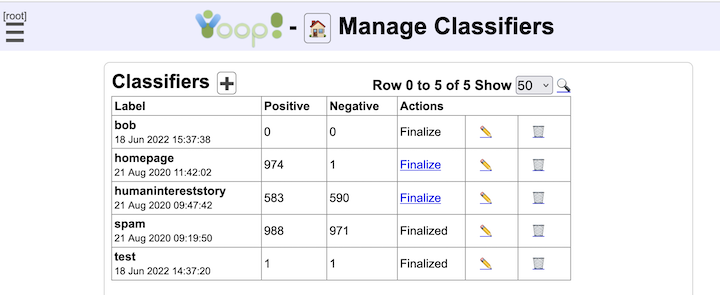

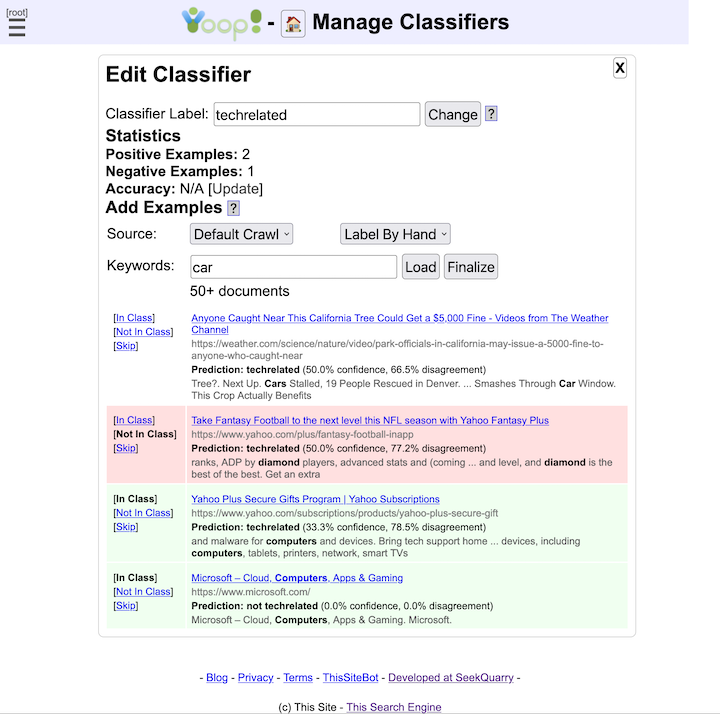

Despite its simpler one-round model, Yioop does a number of things to improve the quality of its search results. While indexing, Yioop can make use of Lasso regression classifiers [ GLM2007 ] using data from earlier crawls to help label and/or rank documents in the active crawl. Yioop also takes advantage of the link structure that might exist between documents in a one-round way: For each link extracted from a page, Yioop creates a micropage which it adds to its index. This includes relevancy calculations for each word in the link as well as an [ APC2003 ]-based ranking of how important the link was. Yioop supports a number of iterators which can be thought of as implementing a stripped-down relational algebra geared towards word-document indexes. One of these operators allows one to make results from unions of stored crawls. This allows one to do many smaller topic specific crawls and combine them with your own weighting scheme into a larger crawl. A second useful operator allows you to display a certain number of results from a given subquery, then go on to display results from other subqueries. This allows you to make a crawl presentation like: the first result should come from the open crawl results, the second result from Wikipedia results, the next result should be an image, and any remaining results should come from the open search results. Yioop comes with a GUI facility to make the creation of these crawl mixes easy. To speed up query processing for these crawl mixes one can also create materialized versions of crawl mix results, which makes a separate index of crawl mix results. Another useful operator Yioop supports allows one to perform groupings of document results. In the search results displayed, grouping by url allows all links and documents associated with a url to be grouped as one object. Scoring of this group is a sum of all these scores. Thus, link text is used in the score of a document. 
How much weight a word from a link gets also depends on the link's rank. So a low-ranked link with the word "stupid" to a given site would tend not to show up early in the results for the word "stupid". Grouping also is used to handle deduplication: It might be the case that the pages of many different URLs have essentially the same content. Yioop creates a hash of the web page content of each downloaded url. Amongst urls with the same hash only the one that is linked to the most will be returned after grouping. Finally, if a user wants to do more sophisticated post-processing such as clustering or computing page rank, Yioop supports a straightforward architecture for indexing plugins.
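The grouping and deduplication steps described above can be sketched as two small functions. The choice of SHA-256 as the content hash, the data shapes, and the function names are assumptions for illustration; the text only says that scores within a URL group are summed and that, among URLs with the same content hash, the most linked-to one is kept.

```python
import hashlib
from collections import defaultdict


def group_by_url(items: list[tuple[str, float]]) -> dict[str, float]:
    """Sum the scores of every document or link item that points at the same URL."""
    totals: dict[str, float] = defaultdict(float)
    for url, score in items:
        totals[url] += score
    return dict(totals)


def deduplicate(pages: dict[str, str], inlinks: dict[str, int]) -> list[str]:
    """Among URLs whose content hashes match, keep only the most linked-to URL."""
    best: dict[str, str] = {}
    for url, content in pages.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        current = best.get(digest)
        if current is None or inlinks.get(url, 0) > inlinks.get(current, 0):
            best[digest] = url
    return sorted(best.values())


if __name__ == "__main__":
    # One page item and two link micropages for u1, one page item for u2.
    print(group_by_url([("u1", 1.0), ("u1", 0.5), ("u1", 0.25), ("u2", 1.5)]))
    print(deduplicate({"a": "same text", "b": "same text", "c": "other"},
                      {"a": 1, "b": 5, "c": 0}))
```

In the first call, the link items raise the group score of u1 above that of u2 even though u2's page item alone scores higher, which is how link text ends up contributing to a document's score.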

There are several open source crawlers, indexers, and front ends which can scale to crawls in the millions to hundreds of millions of pages. Most of these are written in Java, C, C++, or C#, not PHP. Three important ones are Nutch/ Lucene/ Solr [ KC2004 ], YaCy, and Heritrix [ MKSR2004 ]. Nutch is the original application for which the Hadoop infrastructure described above was developed. Nutch is a crawler, Lucene is for indexing, and Solr is a search engine front end. The YaCy project uses an interesting distributed hash table peer-to-peer approach to crawling, indexing, and search. Heritrix is a web crawler developed at the Internet Archive. It was designed to do archival quality crawls of the web. Its ARC file format inspired the use of PartitionDocumentBundles in Yioop. These bundle folders consist of a sequence of container files in Yioop's file format for storing web pages, web summary data, url lists, and other kinds of data used by Yioop. Yioop's container format consists of a sequence of compressed records stored using the PackedTableTools class. These records are a little different from ARC files in that their blob columns are serialized PHP objects rather than pure web page data. One nice aspect of using serialized PHP objects versus serialized Java objects is that they are humanly readable text strings, and so if one decompresses one of the container files they are still relatively intelligible. The main purpose of a PartitionDocumentBundle is to allow one to store many small files compressed as one big file. These bundles also make data from web crawls very portable, making them easy to copy from one location to another. Like Nutch and Heritrix, Yioop also has a command-line tool for quickly looking at the contents of such archive objects.

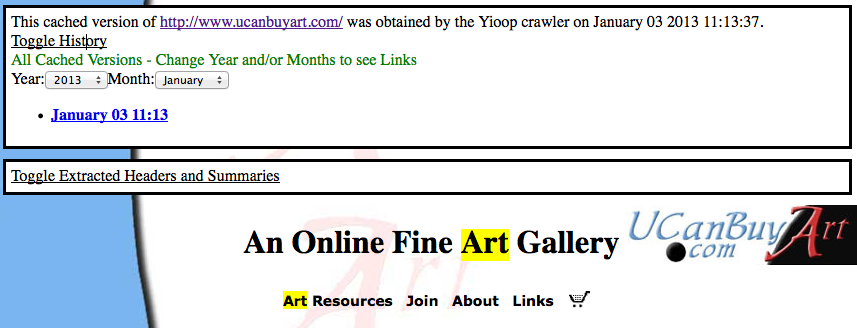

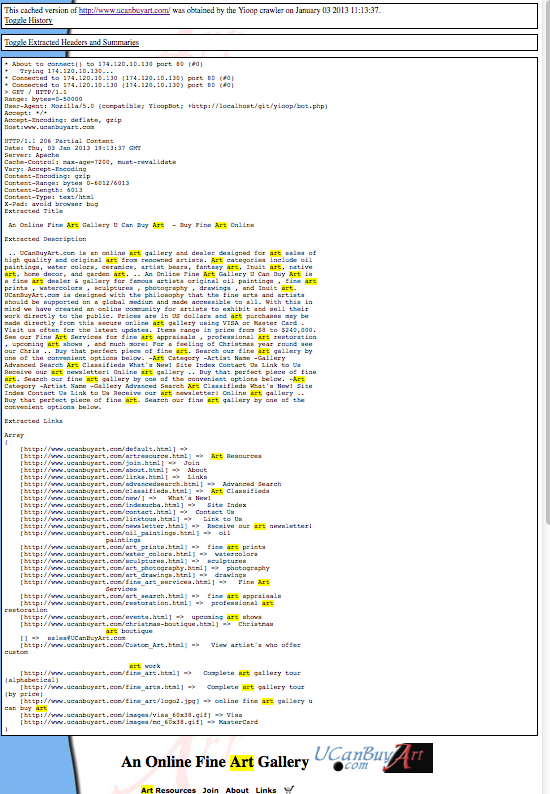

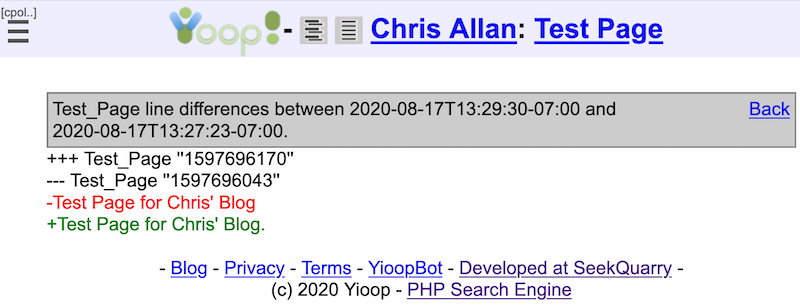

The ARC format is one example of an archival file format for web data. Besides at the Internet Archive, ARC and its successor WARC format are often used by TREC conferences to store test data sets such as GOV2 and the ClueWeb 2009 / ClueWeb 2012 Datasets. In addition, it was used by grub.org (hopefully, only on a temporary hiatus), a distributed, open-source, search engine project in C#. Another important format for archiving web pages is the XML format used by Wikipedia for archiving MediaWiki wikis. Wikipedia offers Creative Commons-licensed downloads of their site in this format. Curlie.org, formerly the Open Directory Project, makes available its ODP data sets in an RDF-like format licensed using the Open Directory License. Thus, we see that there are many large scale useful data sets that can be easily licensed. Raw data dumps do not contain indexes of the data though. This makes sense because indexing technology is constantly improving and it is always possible to re-index old data. Yioop supports importing and indexing data from ARC, WARC, database query results, MediaWiki XML dumps, and Open Directory RDF. Yioop further has a generic text importer which can be used to index log records, mail, Usenet posts, etc. Yioop also supports re-indexing of old Yioop data files created after version 0.66, and indexing crawl mixes. This means using Yioop you can have searchable access to many data sets as well as have the ability to maintain your data going forward. When displaying caches of web pages in Yioop, the interface further supports the ability to display a history of all cached copies of that page, in a similar fashion to the Internet Archive's interface.

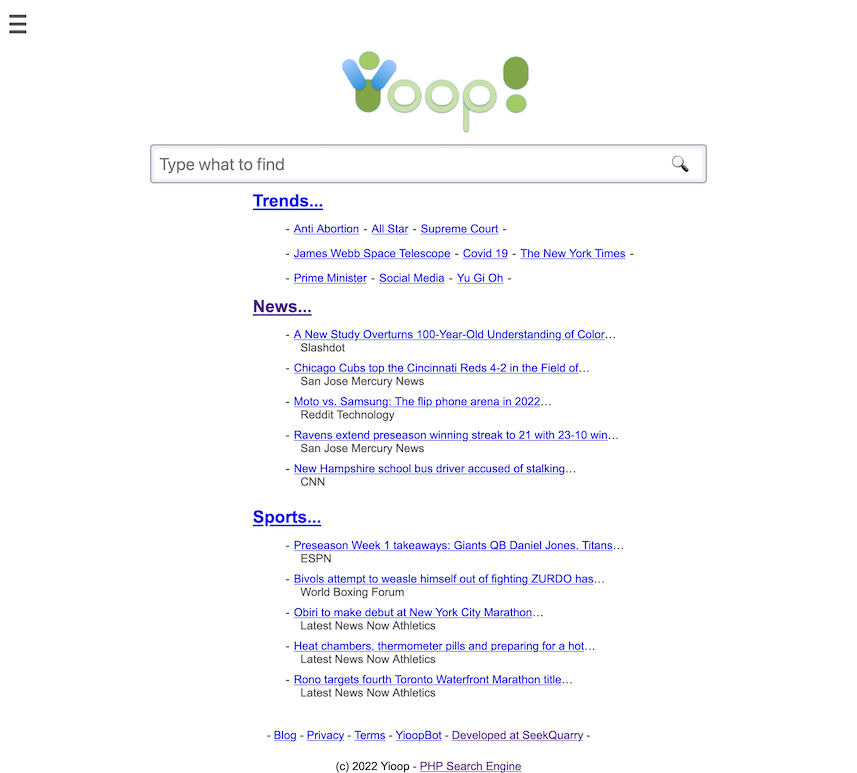

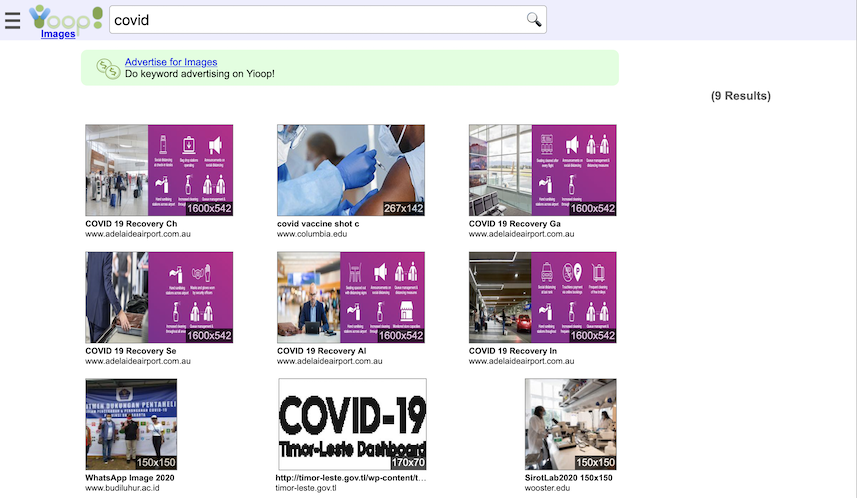

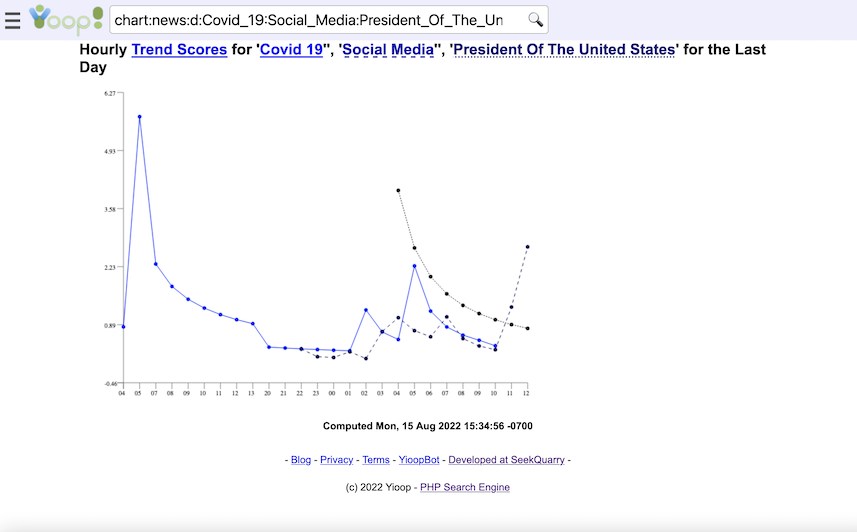

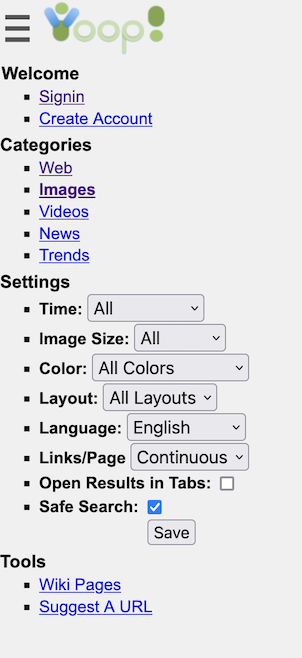

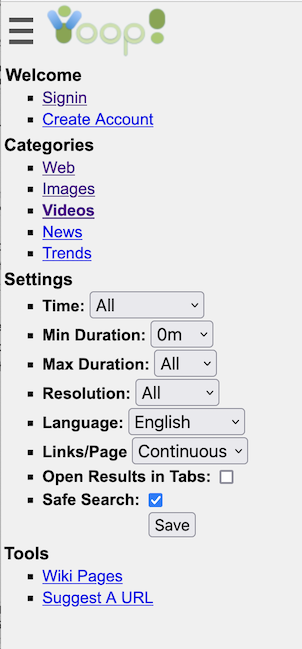

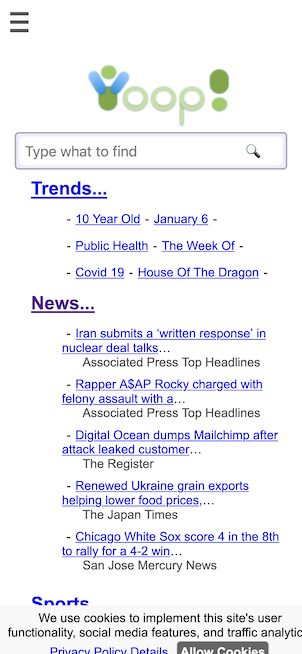

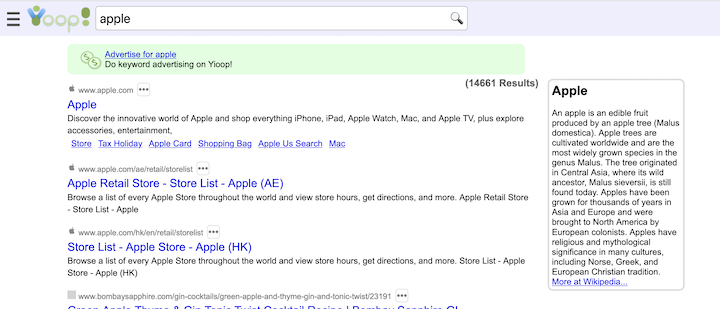

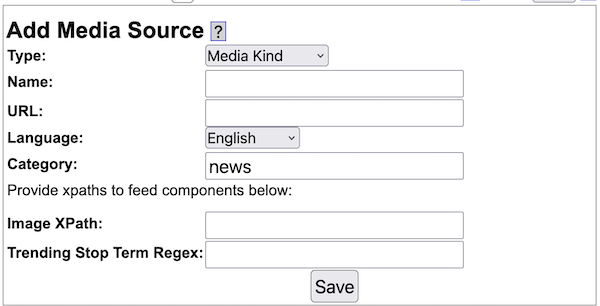

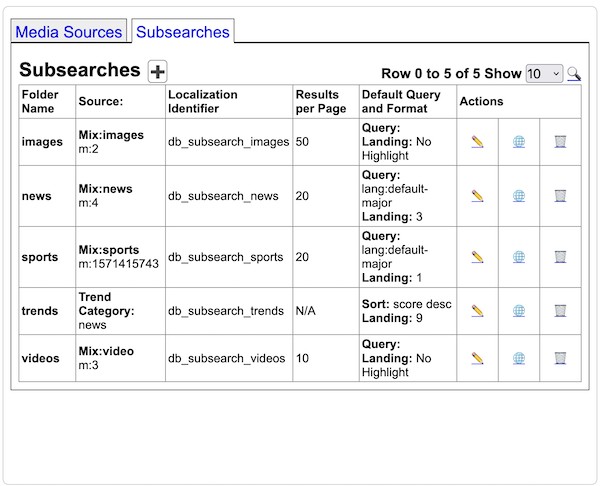

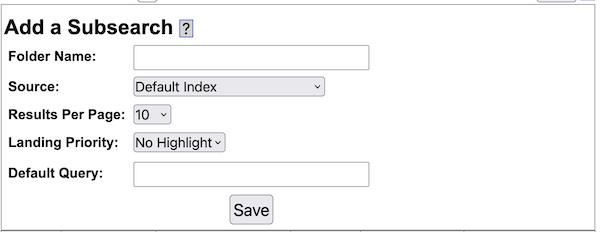

Another important aspect of creating a modern search engine is the ability to display various media sources in an appropriate way. Yioop comes with built-in subsearch abilities for images, where results are displayed as image strips; video, where thumbnails for video are shown; news, where news items can be grouped together by category (Headlines, Sports, Entertainment) and a configurable set of news/Twitter feeds can be set to be updated on an hourly basis; and trends, where statistics about how keywords in news items or on web pages change over time are presented.

This concludes the discussion of how Yioop fits into the current and historical landscape of search engines and indexes.

Feature List

The feature changes between different versions of Yioop can be found on the Changelog page. Here is a summary of the main features of the current version of Yioop:

- General

- Yioop is an open-source, distributed crawler and search engine written in PHP and fully updated to work with PHP 8.1.

- Yioop has its own built-in web server so can be deployed with or without a web server such as Apache. Yioop also has been tested to work when Apache is configured to use HTTP/2.0.

- Crawling, indexing, and serving search results can be done on a single machine or distributed across several machines.

- The fetcher/queue server processes on several machines can be managed through the web interface of a main Yioop instance.

- Yioop installations can be created with a variety of topologies: one queue server and many fetchers or several queue servers and many fetchers.

- Using web archives, crawls can be mirrored amongst several machines to speed-up serving search results. This can be further sped-up by using filecache.

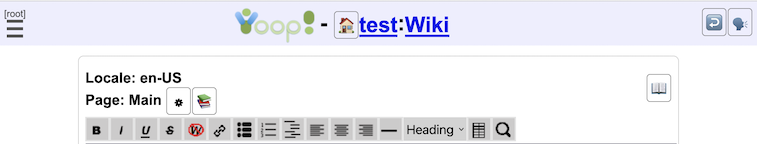

- Yioop can be used to create web sites via its own built-in wiki system. For more complicated customizations, Yioop's model-view-adapter framework is designed to be easily extendible. This framework also comes with a GUI which makes it easy to localize strings and static pages.

- The appearance of a Yioop web site can also be controlled in the Appearance activity, which also lets one create customized CSS themes for the site.

- Yioop can be used as a package in other projects using Composer.

- Yioop search result and feed pages can be configured to display banner or skyscraper ads through a Site Admin GUI (if desired).

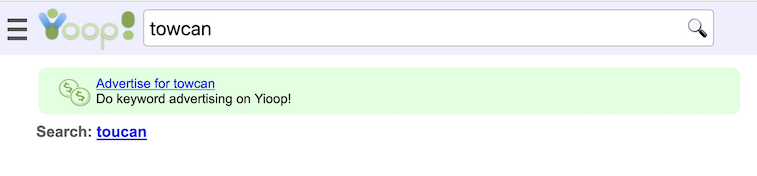

- Yioop search result and feed pages can also be configured to use Yioop's built-in keyword advertising system.

- Yioop has been optimized to work well with smart phone web browsers and with tablet devices.

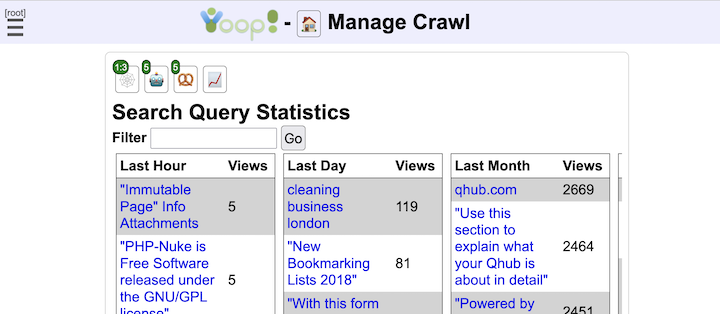

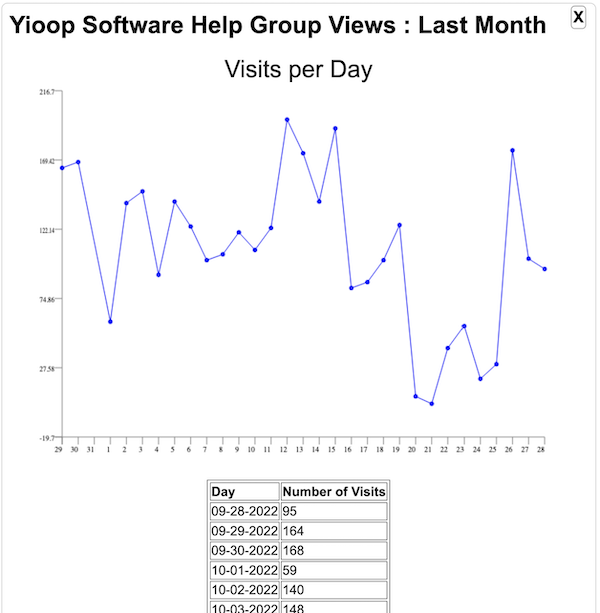

- Yioop has a built-in analytics system which, when running, can be used to track search queries, wiki page views, and discussion board views.

- To help ensure privacy when search and discussion board aggregate data is displayed, Yioop can make use of a differential privacy subsystem.

- Yioop can be configured using its web interface including image, audio, and video serving.

- Social and User Interface

- Yioop can be configured to allow or not to allow users to register for accounts.

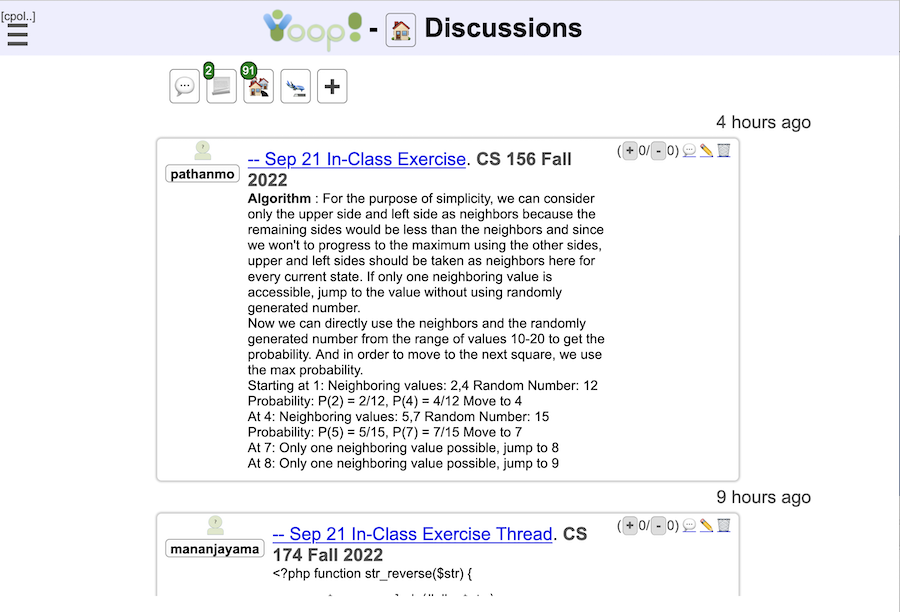

- If allowed, user accounts can create discussion groups, blogs, and wikis.

- On a per group basis, monetization in Yioop is also supported by charging a credit fee to join groups.

- Blogs and wikis support attaching images, videos, and files, have a facility for displaying QR codes, and also support including math using LaTeX or AsciiMathML.

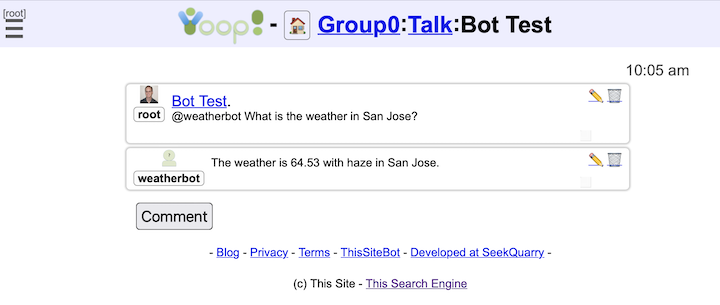

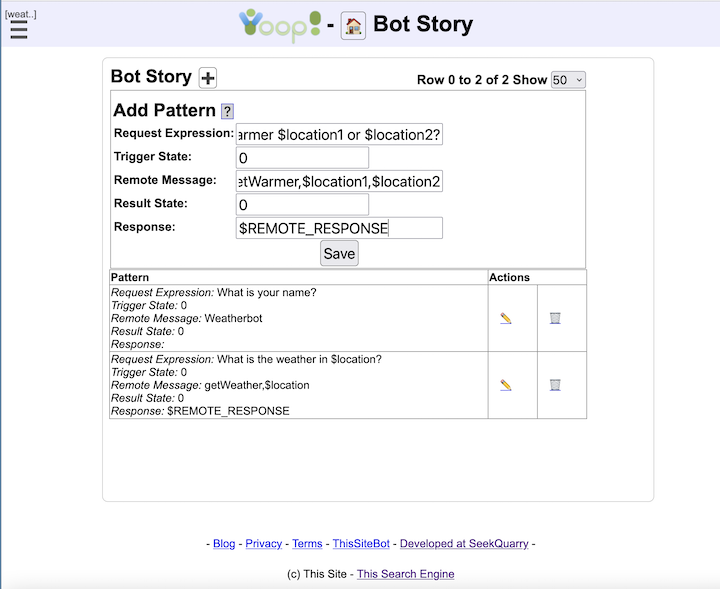

- Discussion boards support Chat Bots and Yioop has an API for integrating Chat Bot Users into groups.

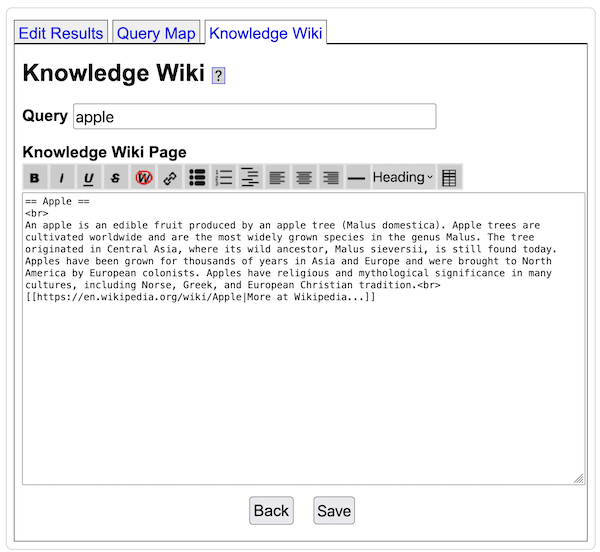

- Yioop comes with three built-in groups: Help, Search, and Public. Help's wiki pages allow one to customize the integrated help throughout the Yioop system. Search's wiki pages are used to customize callouts next to search results. Its feed system is used for editing search results. The Public group's discussion can be used as a site blog, and its wiki page can be used to customize the look-and-feel of the overall Yioop site without having to do programming.

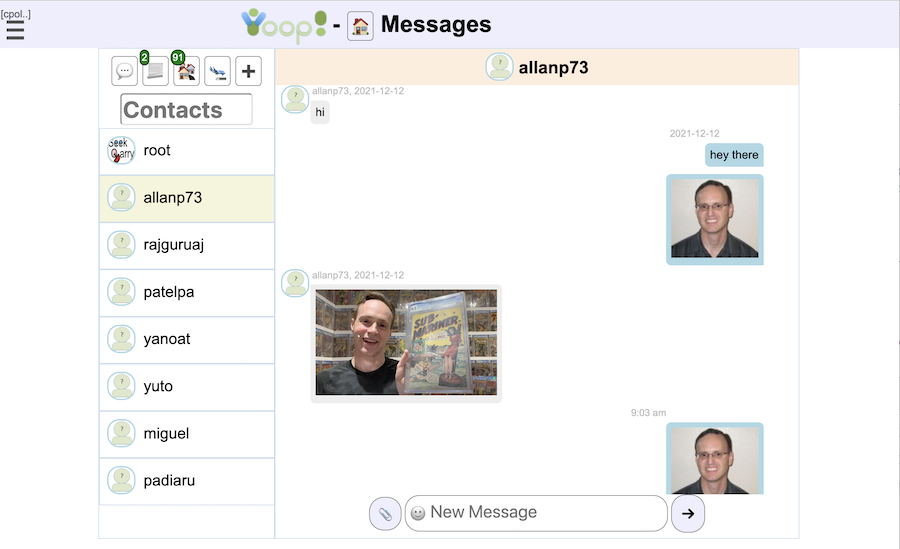

- Each user account also gets a personal group. The feed system of this group is used for user-to-user messaging. The wiki system of this group is used as an individual user's clipboard, if the user wants to move wiki pages between groups.

- Data from non-Yioop discussion boards, if presented as RSS, can be imported into a Yioop discussion group.

- Wiki pages support different types such as standard wiki page, wiki page with discussions, media gallery, page alias, share wall, slide presentation, and URL shortener. Media galleries can be used to present lists of videos together with whether they have been watched, and if so, how far -- much like Kodi.

- Comma Separated Value files (CSV) can be edited like spreadsheets and can include equations. Values from CSV files can be embedded into wiki documents in a natural way.

- Yioop supports a wiki form syntax that allows wiki pages to have customized forms on them. When a user submits data from a wiki form, it gets stored in a file form_data.csv in the page's resource folder.

- Video on wiki pages and in discussion posts is served using HTTP-pseudo streaming so users can scrub through video files. For uploaded video files below a configurable size limit, videos are automatically converted to web friendly mp4 format, provided the distributed version of the media updater is in use.

- If FFMPEG is configured, thumbnails of videos will show animated snippets. If ImageMagick is configured, then thumbnails for PDFs will be displayed. If CALIBRE is configured, then thumbnails for epub and html documents will be displayed.

- Wiki media gallery pages of video resources output Open Graph info about the video.

- Yioop detects automatically if a video has associated with it a VTT captioning or subtitling file and can serve videos with captions and subtitles.

- PDF and Epub files can be displayed automatically on gallery pages, allowing simple e-reading which remembers where a user was last reading.

- Wiki pages can be configured to have auto-generated tables of contents, to make use of common headers and footers, and to output meta tags for SEO purposes.

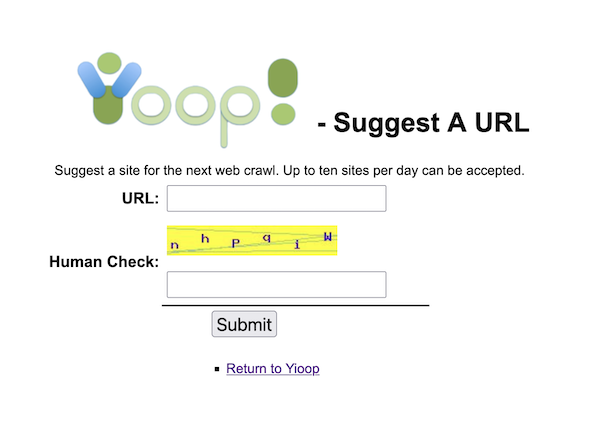

- If user accounts are enabled, Yioop has a search tools page on which people can suggest urls to crawl.

- Yioop has three different captcha mechanisms that can be used in account registration and for suggesting urls: a standard graphics-based captcha, a text-based captcha, and a hash-cash-like captcha.

- Search

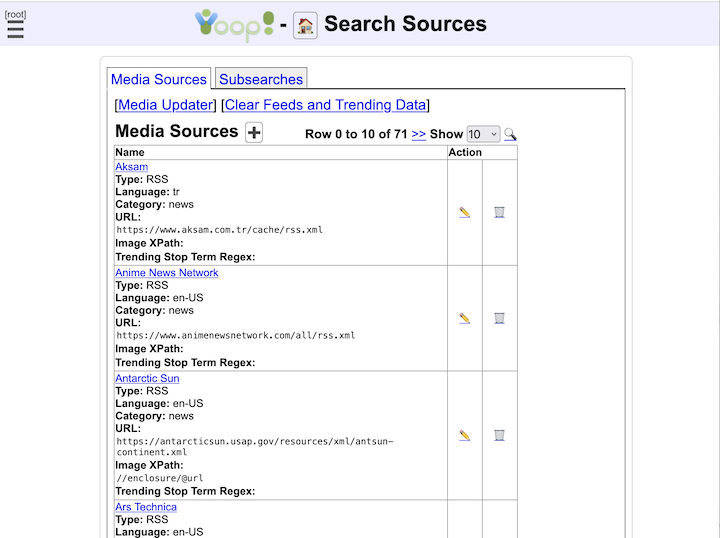

- Yioop supports subsearches geared towards presenting certain kinds of media such as images, video, and news. The list of news sites and how videos are scraped can be configured through the GUI. Yioop has a media updater process which can be used to automatically update news and general media feeds hourly. It can also be used to download podcasts to wiki pages.

- Yioop has a trending page for what words were most popular in the news feeds at different time scales.

- News and media feeds can be RSS feeds, Atom feeds, or JSON feeds, or can be scraped from an HTML page using XPath queries or a regular expression scraper. What image is used for a news feed item can also be configured using XPath queries.

- Several hourly processes such as news updates, podcast updates, video conversion, and mail delivery can be configured to be distributed across several machines automatically.

- Yioop determines search results using a number of iterators which can be combined like a simplified relational algebra.

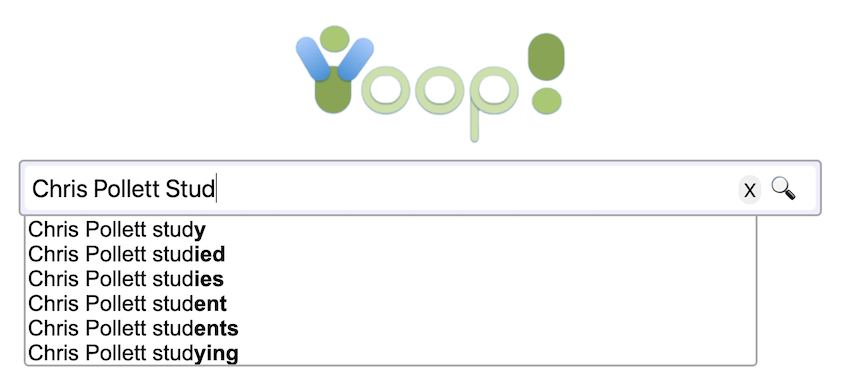

- Yioop can be configured to display word suggestions as a user types a query. It can also suggest spell corrections for mis-typed queries. This feature can be localized.

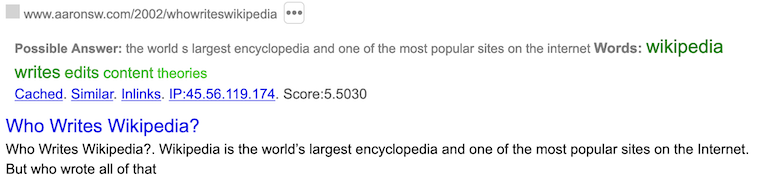

- Yioop has a built-in triplet extraction system for English which allows for a limited form of Question Answering within Yioop for English.

- Yioop supports language detection of queries and web pages and safe search of web pages.

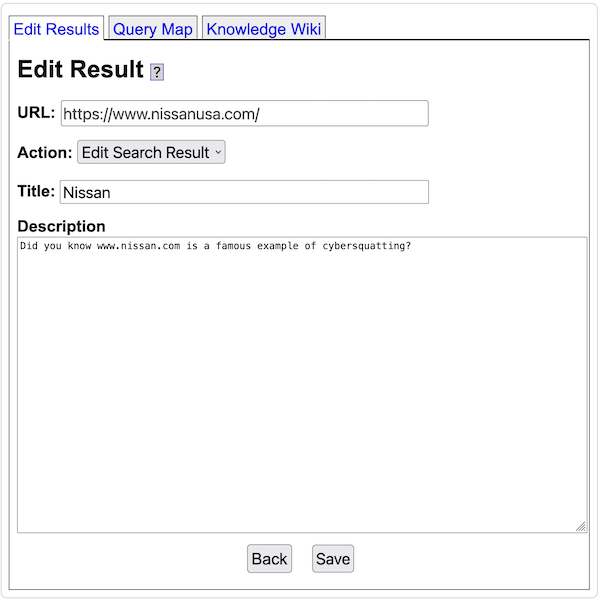

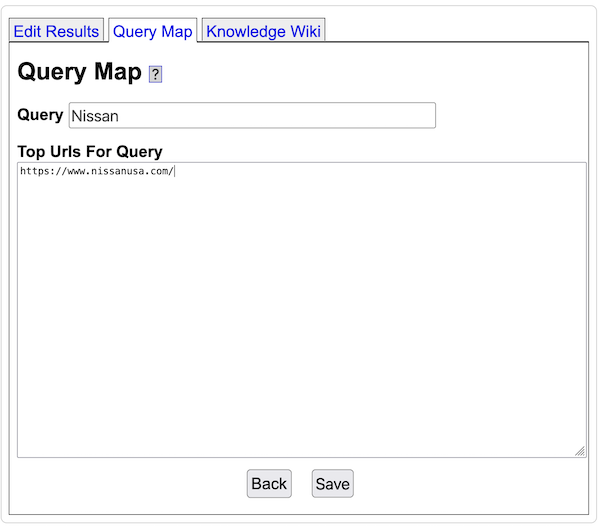

- Yioop supports the ability to filter out urls from search results after a crawl has been performed. It also has the ability to edit summary information that will be displayed for urls.

- A given Yioop installation might have several saved crawls and it is very quick to switch between any of them and immediately start doing text searches.

- Besides the standard output of a web page with ten links, Yioop can output query results in Open Search RSS format and in a JSON variant of this format. Yioop data can also be queried via a function API.

- Indexing

- Yioop is capable of indexing anything from small sites to sites or collections of sites containing low hundreds of millions of documents.

- Yioop indexes are positional rather than bag-of-words indexes, and an index compression scheme called Modified9 is used.

- Yioop has a web interface which makes it easy to combine results from several crawl indexes to create unique result presentations. These combinations can be done in a conditional manner using "if:" meta words.

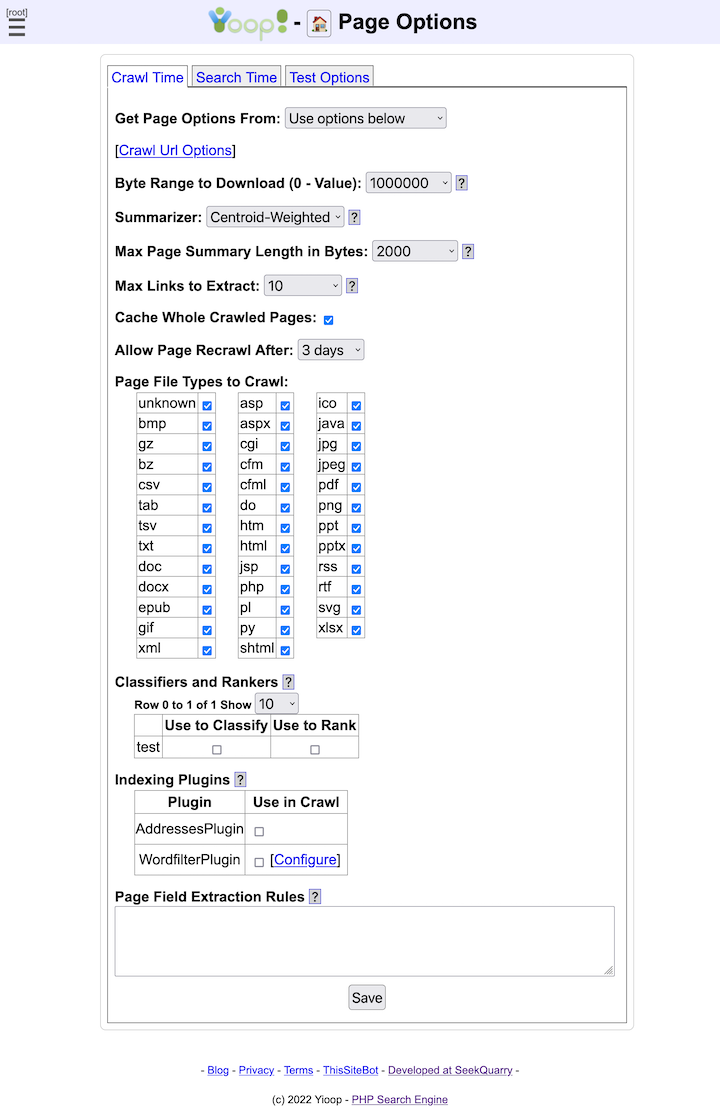

- Yioop supports the indexing of many different filetypes including: HTML, Atom, BMP, DOC, DOCX, ePub, GIF, JPG, PDF, PPT, PPTX, PNG, RSS, RTF, sitemaps, SVG, XLSX, and XML. It has a web interface for controlling which amongst these filetypes (or all of them) you want to index. It also supports attempting to extract information from unknown filetypes.

- Yioop supports extracting data from zipped formats like DOCX even if it only did a partial download of the file.

- Yioop has a simple page rule language for controlling what content should be extracted from a page or record.

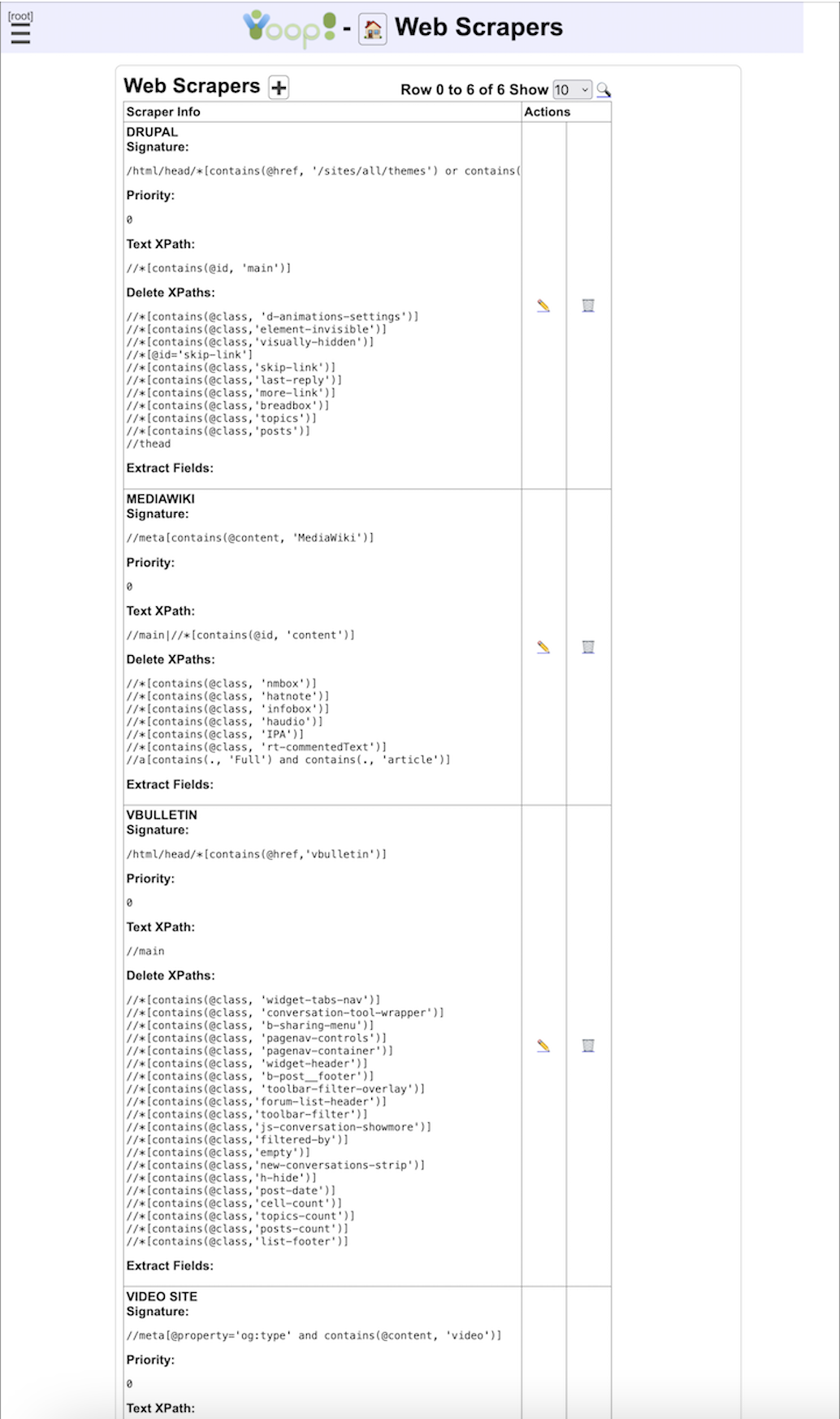

- Users can define rules for web scraping content from particular kinds of web sites such as Wordpress, Drupal, and other CMS systems and users can define rules to scrape different kinds of meta information such as Open Graph tags.

- Yioop has four different kinds of text summarizers which can be used to further affect what words are indexed: a basic tag-based summarizer, a graph-based summarizer, a centroid algorithm summarizer, and a weighted-centroid algorithm summarizer. It can generate word clouds from summarized documents.

- Indexing occurs as crawling happens, so when a crawl is stopped, it is ready to be used to handle search queries immediately.

- Yioop Indexes can be used to create classifiers which then can be used in labeling and ranking future indexes.

- Yioop comes with stemmers for English, Arabic, Dutch, French, German, Greek, Hindi, Italian, Persian, Portuguese, Russian, and Spanish, and a word segmenter for Chinese. It uses char-gramming for other languages. Yioop has a simple architecture for adding stemmers for other languages.

- Yioop uses a web archive file format which makes it easy to copy crawl results amongst different machines. It has a command-line tool for inspecting these archives if they need to be examined in a non-web setting. It also supports command-line search querying of these archives.

- Yioop supports an indexing plugin architecture to make it possible to write one's own indexing modules that do further post-processing.

- Web and Archive Crawling

- Yioop supports open web crawls, but through its web interface one can also configure it to crawl only specific sites, domains, or collections of sites and domains.

- Yioop supports crawling a site up to a certain depth starting from seed nodes.

- Yioop supports general repeating crawls. These crawls have a repeat frequency and two indexes: one for searching and one for crawling. Yioop automatically switches between the two every repeat period.

- Yioop supports multiple simultaneous crawls by assigning machines to channels and then scheduling crawls to those channels.

- Yioop uses multi-curl to support many simultaneous downloads of pages.

- Yioop obeys robots.txt files by default including Google and Bing extensions such as the Crawl-delay and Sitemap directives as well as * and $ in allow and disallow. It further supports the robots meta tag directives NONE, NOINDEX, NOFOLLOW, NOARCHIVE, and NOSNIPPET and the link tag directive rel="canonical". It also supports anchor tags with rel="nofollow" attributes. It also supports X-Robots-Tag HTTP headers. Finally, it tries to detect if a robots.txt became a redirect due to congestion.

- Yioop can be configured to relax how it obeys robots.txt so that it always downloads a site's landing page, or to ignore robots.txt completely if one wants.

- Yioop sends Referer headers when crawling pages, because some CDNs block robots when this header is not present.

- Yioop comes with a word indexing plugin which can be used to control how Yioop crawls based on words on the page and the domain. This is useful for creating niche subject specific indexes.

- Yioop has its own DNS caching mechanism and it adjusts the number of simultaneous downloads it does in one go based on the number of lookups it will need to do.

- Yioop can crawl over HTTP, HTTPS, and Gopher protocols. Yioop is configured to try to do HTTP requests over HTTP/2.0 before falling back to HTTP/1.1 or HTTP/1.0.

- Yioop supports crawling TOR networks (.onion urls).

- Yioop supports crawling through a list of proxy servers.

- Yioop supports crawling Git Repositories and can index Java and Python code.

- Yioop supports crawl quotas for web sites. I.e., one can control the number of urls/hour downloaded from a site.

- Yioop can detect website congestion and slow down crawling a site that it detects as congested.

- Yioop supports dynamically changing the allowed and disallowed sites while a crawl is in progress. Yioop also supports dynamically injecting new seed sites into the active crawl via a web interface.

- Yioop has a web form that allows a user to control the recrawl frequency for a page during a crawl.

- Yioop keeps track of ETag: and Expires: HTTP headers to avoid downloading content it already has in its index.

- Yioop supports importing data from ARC, WARC, database queries, MediaWiki XML, and ODP RDF files. It has a generic importing facility to import text records such as access logs, mail logs, usenet posts, etc., which are either not compressed, or compressed using gzip or bzip2. It also supports re-indexing of data from previous crawls.
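As a rough illustration of the robots.txt behavior listed among the crawling features above, the following sketch uses Python's standard-library parser rather than Yioop's own PHP code. The robots.txt content, the bot name ExampleBot, and the example.com URLs are all hypothetical. The standard-library parser handles Disallow and Crawl-delay but not the * and $ path wildcards, so this shows only the simplest cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
BOT = "ExampleBot"  # hypothetical crawler name, not Yioop's

# A polite crawler asks before each download and waits between requests.
allowed = rules.can_fetch(BOT, "https://example.com/index.html")
blocked = rules.can_fetch(BOT, "https://example.com/private/report.html")
delay = rules.crawl_delay(BOT)  # seconds to wait between requests, or None
```

A crawler following these rules would download index.html, skip anything under /private/, and pause for the returned delay between requests to the same host.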

Set-up

Requirements

Yioop can be configured to run using its internal web server or using an external web server. Run as its own web server, Yioop requires: (1) PHP 8.0.8 or better, (2) Curl libraries enabled in PHP, (3) sqlite support enabled in PHP, (4) multi-byte string support enabled in PHP, (5) xml support enabled in PHP, (6) GD graphic libraries enabled in PHP, (7) openssl support enabled in PHP, (8) Zip archive support. Most of these features are enabled in PHP by default, but you should still check your configuration. In a third-party web server setting, the Yioop search engine requires in addition: (1) a web server, (2) rewrites enabled. To be a little more specific, Yioop has been tested with Apache 2.2 and Apache 2.4, and it has been reported to work with lighttpd Version 0.82 or newer. It should work with other web servers, although it might take some finessing. One way to install a web server is using a package manager such as the Advanced Package Tool (apt) on Linux, Homebrew on Mac, or Chocolatey on Windows. For Windows, Mac, and Linux, another easy way to get the required software is to download an Apache/PHP/MySql suite such as MAMP or WAMP.

On any platform, if you decide to run Yioop under Apache, make sure that the Apache mod_rewrite module is enabled and that .htaccess files work for the directory in question (often this is the case by default with packages such as MAMP or WAMP, but please check the Yioop install guide for the platform in question to see if anything is required). It is possible to get Yioop to work without mod_rewrite. To do so, you can use the src directory location as the url for Yioop; however, the URLs used by your installation in this scenario will look uglier.

To set up PHP you need to locate the php.ini configuration file of your PHP installation and make sure that curl support is enabled. For example, on a Windows machine this might involve changing the line:

;extension=php_curl.dll

to

extension=php_curl.dll

The php.ini file has a post_max_size setting you might want to change. You might want to change it to:

post_max_size = 32M

Yioop will work with the post_max_size set to as little as two megabytes, but will be faster with the larger post capacity. If you intend to make use of Yioop Discussion Groups and Wikis and their ability to upload documents, you might want to consider also adjusting the value of the variable upload_max_filesize. This value should be set to at most what you set post_max_size to.
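The relationship between upload_max_filesize and post_max_size can be checked mechanically. The sketch below is not part of Yioop; it is a small stand-alone helper, with hypothetical function names, that converts php.ini shorthand sizes (K, M, G suffixes) to bytes and verifies that the upload limit does not exceed the post limit.

```python
import re

# Multipliers for the php.ini shorthand suffixes K, M, and G.
_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def php_size_to_bytes(value: str) -> int:
    """Convert a php.ini shorthand size such as '32M' to a byte count."""
    match = re.fullmatch(r"\s*(\d+)\s*([KMG]?)\s*", value.upper())
    if match is None:
        raise ValueError(f"unrecognized size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit]


def upload_fits_in_post(upload_max_filesize: str, post_max_size: str) -> bool:
    """Return True when upload_max_filesize is at most post_max_size."""
    return php_size_to_bytes(upload_max_filesize) <= php_size_to_bytes(post_max_size)
```

For example, upload_fits_in_post("16M", "32M") holds, while a 64M upload limit paired with a 32M post limit does not.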

If you are using the Ubuntu-variant of Linux, the following lines would get the software you need to run Yioop without a web server:

sudo apt install curl
sudo apt install php-bcmath
sudo apt install php-cli
sudo apt install php-curl
sudo apt install php-gd
sudo apt install php-intl
sudo apt install php-mbstring
sudo apt install php-sqlite
sudo apt install php-xml
sudo apt install php-zip

With a web server, you should also install:

sudo apt install apache2
sudo apt install php
sudo apt install libapache2-mod-php
sudo a2enmod php
sudo a2enmod rewrite

If you decide to use a different database than sqlite, you need the appropriate PHP driver. For example, for mysql:

sudo apt install php-mysql

In addition to the minimum installation requirements above, if you want to use the Manage Machines feature in Yioop, you may need to do some additional configuration. Namely, some sites disable the PHP popen, pclose, and exec functions, so you might have to edit your php.ini file to enable these functions if you want this activity to work. The Manage Machines activity allows you, through a web interface, to start, stop, and look at the log files for each of the QueueServers and Fetchers that you want Yioop to manage. It also allows you to start and stop the Media Updater process or processes. If it is not configured, then these tasks would need to be done via the command line. Also, if you do not use the Manage Machines interface, your Yioop site can make use of only one QueueServer.

As a final step, after installing the necessary software, make sure to start/restart your web server and verify that it is running.

Memory Requirements

Yioop sets certain upper bounds on the amount of memory its processes can use. These are partially calculated based on the amount of available memory your computer has. For machines with at least 8GB of RAM, by default the limit for src/executables/QueueServer.php is set to 4GB, the limit for src/executables/Fetcher.php is set to 2GB, and the limit for index.php is 1GB. For a machine with 4GB of RAM the values are half of these. These values in turn affect the size of crawling objects such as the in-memory priority queue. Each process's memory limit is set near the top of its file with a line like:

ini_set("memory_limit", C\FETCHER_MEMORY_LIMIT);

where the constant FETCHER_MEMORY_LIMIT is computed in src/configs/Config.php.
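The documented defaults can be summarized in a few lines. This is only a sketch of the two cases stated above, not the actual computation in src/configs/Config.php. In particular, treating every machine with less than 8GB of RAM like the documented 4GB case is an assumption made here for illustration, and the function name is hypothetical.

```python
from typing import Dict

GIB = 1024 ** 3

# Documented defaults for a machine with at least 8GB of RAM.
FULL_LIMITS: Dict[str, int] = {
    "QueueServer.php": 4 * GIB,
    "Fetcher.php": 2 * GIB,
    "index.php": 1 * GIB,
}


def default_memory_limits(total_ram_bytes: int) -> Dict[str, int]:
    """Return per-process memory limits in bytes for the given amount of RAM."""
    if total_ram_bytes >= 8 * GIB:
        return dict(FULL_LIMITS)
    # Assumption: anything below 8GB is treated like the documented 4GB
    # case, where every limit is half of the full-size default.
    return {name: limit // 2 for name, limit in FULL_LIMITS.items()}
```

Under these assumptions a 4GB machine would give the QueueServer 2GB, the Fetcher 1GB, and index.php 512MB.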

For the index.php file, you may need to set the limit as well in your php.ini file for the instance of PHP used by your web server. If the value is too low for the index.php Web app, you might see messages in the Fetcher logs that begin with: "Trouble sending to the scheduler at url..."

In a VM setting these requirements are often somewhat steep. It is possible to get Yioop to work in environments like EC2 (be aware this might violate your service agreement). To reduce these memory requirements, one can manually adjust the variables NUM_DOCS_PER_GENERATION, SEEN_URLS_BEFORE_UPDATE_SCHEDULER, NUM_URLS_QUEUE_RAM, MAX_FETCH_SIZE, and URL_FILTER_SIZE in the src/configs/Config.php file or in the file src/configs/LocalConfig.php. By experimenting with these values you should be able to trade off memory requirements for speed in a more fine-grained manner.

LocalConfig.php File

In addition to the settings mentioned above under memory requirements, several other default settings can be overridden in a LocalConfig.php file. If you look at the src/configs/Config.php file, any constant defined using the function nsconddefine can be overridden. We list a couple of useful ones here:

- ALLOW_FREE_ROOT_CREDIT_PURCHASE

- allows the root account to purchase ad credits for free.

- CALIBRE

- used to set the path to the ebook-convert command-line app for Calibre. This is used by Yioop's wiki system to generate thumbnails of epub and html file resources. This only works if you also have IMAGE_MAGICK configured below.

- DIRECT_ADD_SUGGEST

- makes it so user suggested urls are directly added to the current crawl, rather than added only when a user clicks a link on the Manage Crawls page.

- FFMPEG

- used to set the path to FFMPEG (if it is installed), which can be used to convert videos uploaded to groups to mp4. It is also used to make animated GIFs for thumbnails of videos.

- IMAGE_MAGICK

- used to set the path to the convert command-line app for ImageMagick. This is used by Yioop's wiki system to generate thumbnails of PDF file resources.

- MAX_QUERY_CACHE_TIME

- controls how long a query is cached before it is recomputed. A value of -1 keeps it in the cache until the cache is full.

- QRENCODE

- used to set the path to qrencode (if it is installed), which can be used to add QR codes to wiki pages.

- ROOT_USERNAME

- allows one to change the root username to something other than root

- SERVER_CONTEXT

- used to set up the way the built-in web server works in Yioop. The value of this variable is an array of configuration parameters, for example:

[
    'SERVER_ADMIN' => 'bob@builder.org',
    'SERVER_NAME' => 'Yioop',
    'SERVER_SOFTWARE' => 'YIOOP_SERVER',
    'ssl' => [
        'local_cert' => 'your_cert.crt',
        'cafile' => 'certificate_authority.crt',
        'capath' => '/etc/ssl/certs',
        'local_pk' => '/etc/ssl/private/path_to_your_private.key',
        'allow_self_signed' => false,
        'verify_peer' => false,
    ],
    'USER' => 'cpollett' # here user is the *nix user to run under.
]

- SITE_NAME

- provides a value for the OpenGraph site_name property when displaying a video from a wiki page on your Yioop site.

- TESSERACT

- path to the tesseract command-line utility for recognizing text from images. If installed, Yioop will recognize text in images and PDFs.

Installation and Configuration

The Yioop application can be obtained using the download page at seekquarry.com . After downloading and unzipping it, move the Yioop search engine software into the folder where you'd like to keep it. If you are running Yioop with an external web server such as Apache, this should be under your web server's document root. If you are not using an external web server, on Linux or Mac open a terminal shell, on a Windows machine open the command shell or Powershell. Switch into the folder of Yioop:

cd yioop_folder_path

Then type:

php index.php

or

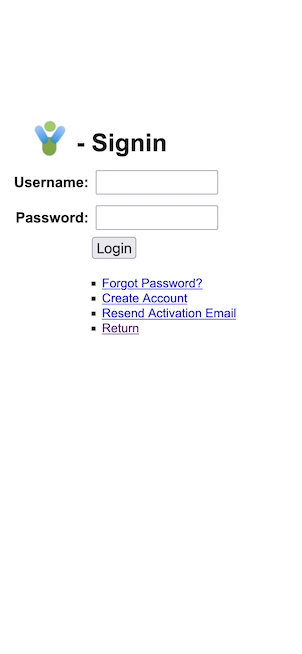

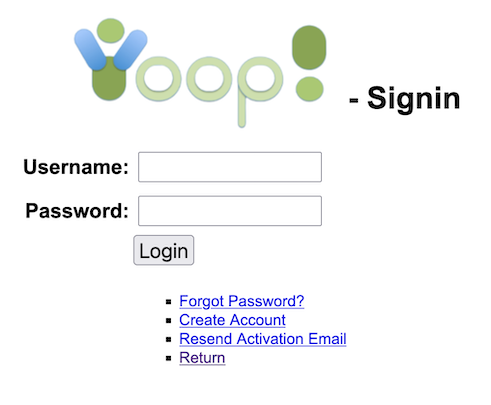

php index.php port_number_to_run_on

Open a browser and go to the page http://localhost:8080/ . If you are running under an external web server, go to the url corresponding to where you placed Yioop under document root. You should see the default Yioop landing page:

If you don't see this page, check that you have installed and configured all the prerequisite software in the Requirements section. The most common sources of problems are that the mod_rewrite package is not loaded for Apache, that the .htaccess file of Yioop is not being processed because Apache is configured so as not to allow overrides on that directory, or that the web server doesn't have write permissions on the YIOOP_DIR/src/configs/Configs.php file and the YIOOP_DIR/work_directory folder. If you have gotten the above screen, then congratulations, Yioop is installed! In this section, we give a first-pass description of how to customize some general aspects of your site. This will include configuring your site for development versus production, describing your crawler to the websites you will crawl, and customizing the icons, color scheme, and timezone settings of your site. The next section describes more advanced configurations such as user registration and advertisement serving.

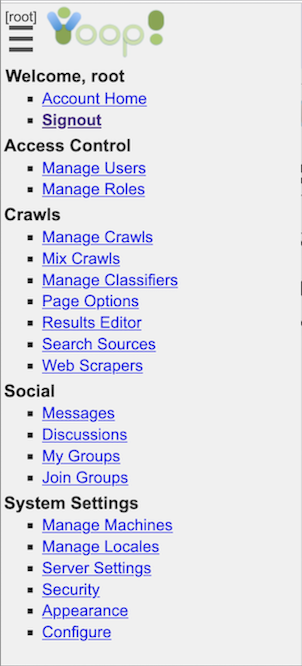

Configurations for Development, Production, Testing, and Crawling

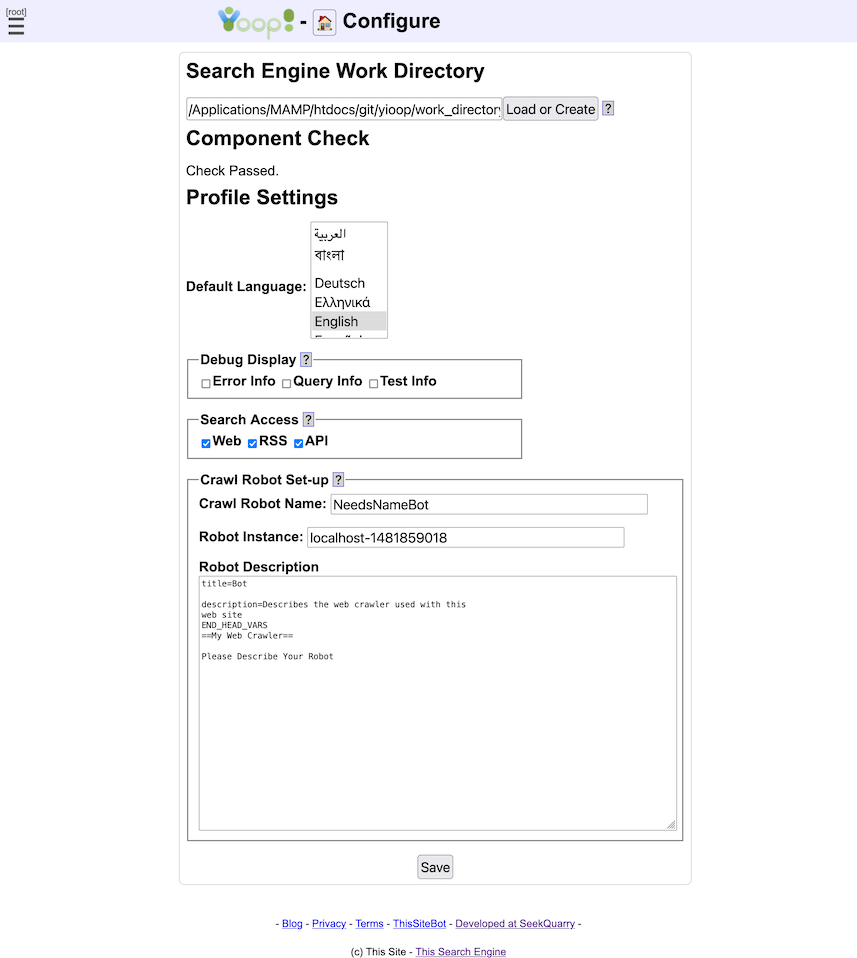

Click the hamburger menu icon to reveal what we will call the activity menu. From the activity menu, click on the Signin link to log on to the administrative panel for Yioop. The default username is root with an empty password. Again from the activity menu, under the System Settings activity group, click on the Configure activity. This activity page looks like:

The Search Engine Work Directory form lets you specify the name of a folder where all of the data specific to your copy of Yioop should be stored. This defaults to YIOOP_DIR/work_directory, and you probably don't want to change this, but you can change its location to wherever you want Yioop-specific data to be stored. The web server needs permission to write to this folder for Yioop to work. Notice that under the text field in the image above there is a heading "Component Check" and it says "Checks Passed". Yioop does an automatic check to see if it can find all the common built-in classes and functions it needs to run, making sure they have not been disabled. If the Component Check says something other than "Checks Passed", you will probably need to edit your php.ini file to get Yioop to be able to do crawls.

In the above Configure Screen image, there is a Profile Settings form beneath the Search Engine Work Directory form. The Profile Settings form allows you to configure the debug, search access, database, queue server, and robot settings.

The Debug Display fieldset has three checkboxes: Error Info, Query Info, and Test Info. Checking Error Info means that when the Yioop web app runs, any PHP Errors, Warnings, or Notices will be displayed on web pages. This is useful if you need to do debugging, but should not be set in a production environment. The second checkbox, Query Info, when checked, causes statistics about database queries, such as their running time, to be displayed at the bottom of each web page. The last checkbox, Test Info, controls whether automated tests of some of the system's library classes are made available. The link next to it, which appears only when the box is checked, goes to a page where you can run the tests. None of these debug settings should be checked in a production environment.

The Search Access fieldset has three checkboxes: Web, RSS, and API. These control whether a user can use the web interface to get query results, whether RSS responses to queries are permitted, and whether the function-based search API is available. Using the Web Search interface and formatting a query url to get an RSS response are described in the Yioop Search and User Interface section. The Yioop Search Function API is described in the section Embedding Yioop. You can also look in the examples folder at the file SearchApi.php to see an example of how to use it. If you intend to use Yioop in a configuration with multiple queue servers (not fetchers), then the RSS checkbox needs to be checked.

The Crawl Robot Set-up fieldset is used to provide websites that you crawl with information about who is crawling them.

- The field Crawl Robot Name is used to say part of the USER-AGENT. It has the format:

Mozilla/5.0 (compatible; NAME_FROM_THIS_FIELD; YOUR_SITES_URL/bot)

The value set will be common for all fetcher traffic from the same queue server when downloading webpages. If you are doing crawls using multiple queue servers, you should give the same value to each queue server. The value of YOUR_SITES_URL comes from the Server Settings - Name Server URL field.

- The Robot Instance field is used for web communication internal to a single Yioop instance to help identify which queue server or fetcher under that queue server was involved. This string should be unique for each queue server in your Yioop set-up. The value of this string is written when logging requests between fetchers and queue servers and can be helpful in debugging.

- The Robot Description field is used to specify the Public bot wiki page. This page can also be accessed and edited under My Groups by clicking on the wiki link for the Public group and then editing its Bot page. This wiki page is what is displayed when someone goes to the URL:

YOUR_SITES_URL/bot

The point of this page is to give web owners both contact info for your bot as well as a description of how your bot crawls web sites.
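To make the USER-AGENT format described in this list concrete, here is a minimal sketch that assembles the string from its two inputs. The function name and the example values are hypothetical, and stripping a trailing slash from the site URL before appending /bot is an assumption made so the result has no doubled slash. Yioop's own code may build the string differently.

```python
def build_user_agent(robot_name: str, site_url: str) -> str:
    """Fill in the documented USER-AGENT template for a crawler."""
    # Assumption: drop any trailing slash so that "/bot" is appended cleanly.
    base = site_url.rstrip("/")
    return f"Mozilla/5.0 (compatible; {robot_name}; {base}/bot)"


# Hypothetical values for the Crawl Robot Name and Name Server URL fields.
example_agent = build_user_agent("ExampleBot", "https://search.example.com/")
```

Here example_agent is "Mozilla/5.0 (compatible; ExampleBot; https://search.example.com/bot)", so a site owner who sees it in their logs can visit the /bot URL to read the Robot Description page.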

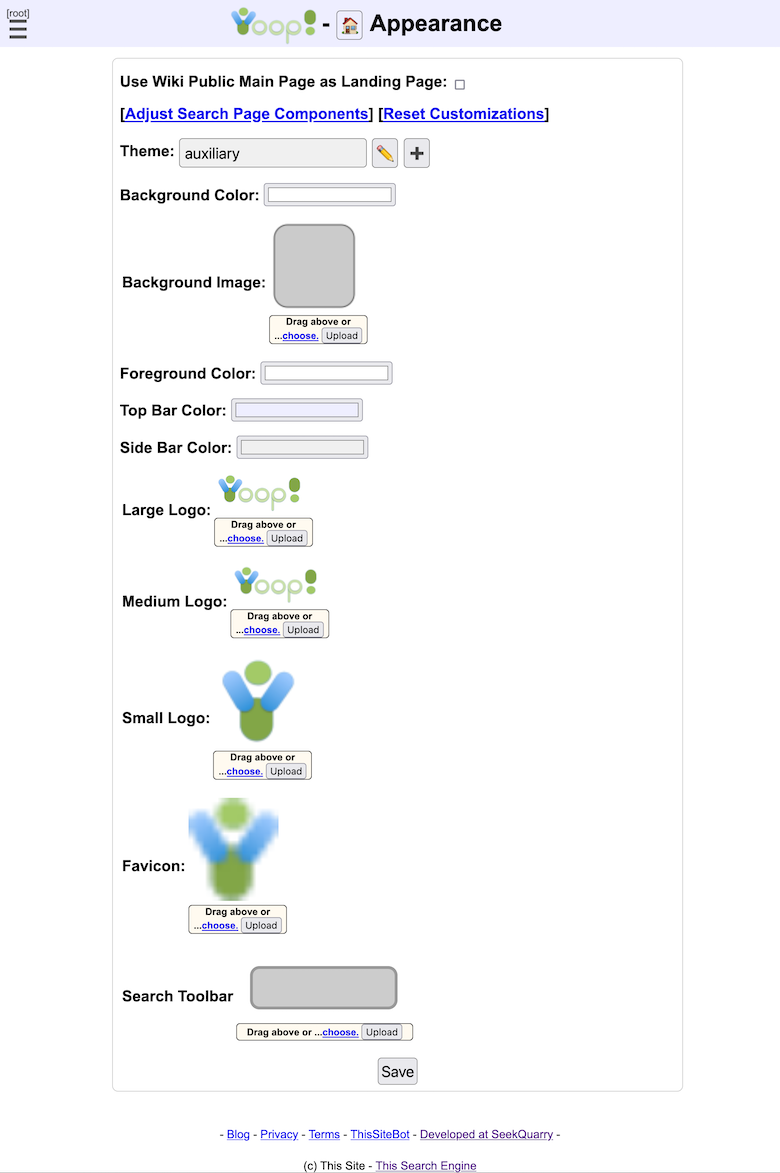

Changing the Global Appearance of Yioop

To change the global appearance of Yioop, under the System Settings activity group on the side of the screen, click on Appearance. This activity page looks like:

The Use Wiki Public Main Page as Landing Page checkbox lets you set the main page of the Public wiki to be the landing page of the whole Yioop site rather than the default centered search box landing page. The Theme dropdown lets you set an additional CSS (Cascading Style Sheet) theme to apply to the look and feel of the Yioop website. The Pencil icon takes one to a page where the current CSS theme can be edited. The Plus icon can be used to create a new CSS theme.

Several of the text fields in Site Customizations control various colors used in drawing the Yioop interface. These include Background Color, Foreground Color, Top Bar Color, and Side Bar Color. The values of these fields can be any legitimate style-sheet color, such as a # followed by red, green, and blue values written in hexadecimal (digits 0-9 and A-F), or a color word such as yellow or cyan. If you would like to use a background image, you can either use the picker link or drag and drop one into the rounded square next to the Background Image label. Various other images such as the Site Logo, Mobile Logo (the logo used for mobile devices), and Favicon (the little logo that appears in the title tab of a page or in the url bar) can similarly be chosen or dragged-and-dropped.
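For readers unsure what counts as a valid hexadecimal color value, the following sketch checks the two most common forms, #RGB and #RRGGBB. It is an illustration only. How Yioop itself validates these fields is not described here, the function name is hypothetical, and color words such as yellow are deliberately not handled.

```python
import re

# Matches "#" followed by exactly three or exactly six hexadecimal digits.
HEX_COLOR = re.compile(r"#(?:[0-9A-Fa-f]{3}|[0-9A-Fa-f]{6})")


def is_hex_color(value: str) -> bool:
    """Return True for style-sheet colors written as #RGB or #RRGGBB."""
    return HEX_COLOR.fullmatch(value.strip()) is not None
```

For instance, #1A2B3C and #fff pass this check, while #12G fails because G is not a hexadecimal digit.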

A Search Toolbar is a short file that can be used to add your search engine to the search bar of a browser. You can drag such a file into the gray area next to this label and click save to set this for your site. The link to install the search bar is visible on the Settings page. There is also a link tag on every page of the Yioop site that allows a browser to auto-discover this as well. As a starting point, one can try tweaking the default Yioop search bar, yioopbar.xml, in the base folder of Yioop.

Optional Server and Security Configurations

The configuration and appearance activities just described suffice to set up Yioop for a single server crawl and to do simple customization of how your Yioop site looks. If that is all that you are interested in, you may want to skip ahead to the section on the Yioop Search Interface to learn about the different search features available in Yioop, or you may want to skip ahead to Performing and Managing Crawls to learn about how to perform a crawl. In this section, we describe the Server Settings and Security activities, which might be useful in a multi-machine, multi-user setting, and which might also be useful for crawling hidden websites or crawling through proxies. We also describe the form used to configure advertisements in Yioop.

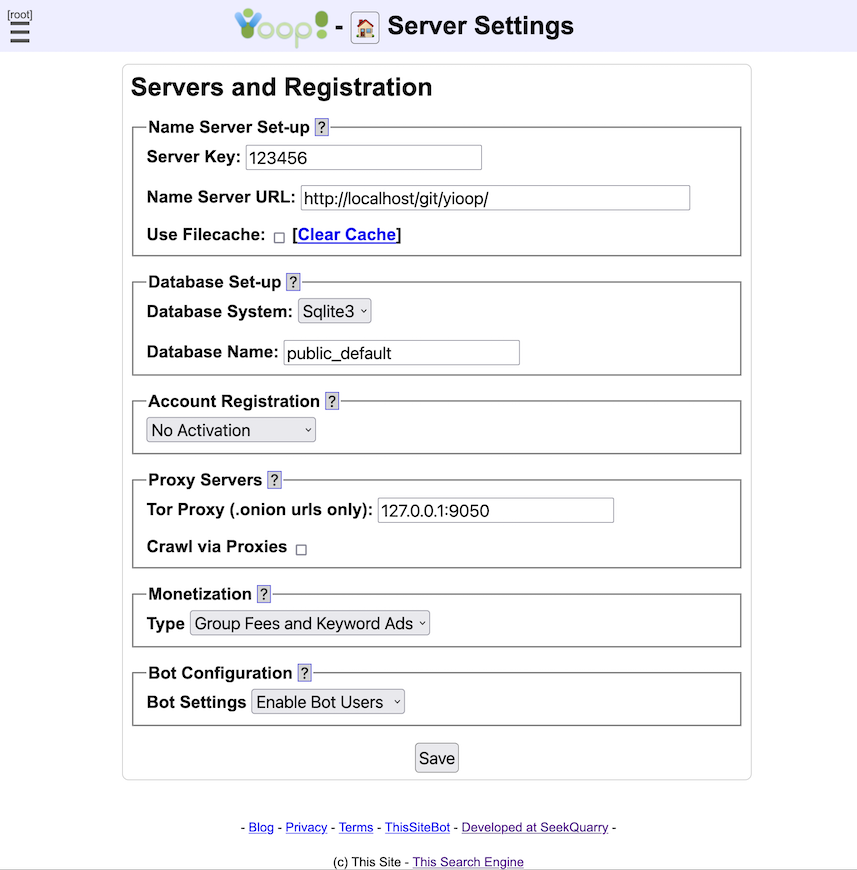

The Server Settings activity looks like:

The Name Server Set-up fieldset is used to tell Yioop which machine is going to act as a name server during a crawl and what secret string to use to make sure that communication is being done between legitimate queue servers and fetchers of your installation. If you are running Yioop as its own server, this fieldset will also contain a link to restart the server, which is useful to make configuration changes take effect in the Yioop-as-server setting. In terms of the configuration settings of this fieldset, you can choose anything for your secret string as long as you use the same string amongst all of the machines in your Yioop installation. The reason why you have to set the name server url is that each machine that is going to run a fetcher to download web pages needs to know who the queue servers are so it can request a batch of urls to download. There are a few different ways this can be set up:

- If the particular instance of Yioop is only being used to display search results from crawls that you have already done, then this fieldset can be filled in however you want.

- If you are doing crawling on only one machine, you can put http://localhost/path_to_yioop/ or http://127.0.0.1/path_to_yioop/, where you appropriately modify "path_to_yioop".

- Otherwise, if you are doing a crawl on multiple machines, use the url of Yioop on the machine that will act as the name server.

In communicating between the fetcher and the server, Yioop uses curl. Curl can be particular about redirects in the case where posted data is involved: if a redirect happens, it does not send posted data to the redirected site. For this reason, Yioop insists on a trailing slash on your queue server url. Beneath the Queue Server Url field is a Filecache checkbox. Sometimes, while testing queries, it is useful to be able to remove what is currently cached so that one can see new results that were just added. The Clear Cache link lets you do this. The Clear Cache link clears both the file cache and the local domain-name-to-IP-address cache.
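The trailing-slash requirement is easy to enforce before a url is pasted into the form. The helper below is a hypothetical convenience written for this guide, not something Yioop provides. It simply appends a slash to the path component when one is missing.

```python
from urllib.parse import urlsplit, urlunsplit


def with_trailing_slash(url: str) -> str:
    """Return url with a slash at the end of its path component."""
    parts = urlsplit(url)
    path = parts.path if parts.path.endswith("/") else parts.path + "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

With this helper, http://localhost/path_to_yioop becomes http://localhost/path_to_yioop/, which avoids a server redirect from the slashless form and so avoids losing posted data.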

The Database Set-up fieldset is used to specify what database management system should be used by Yioop, how it should be connected to, and what user name and password should be used for the connection. At present PDO (PHP's generic DBMS interface), sqlite3, and Mysql databases are supported. The demo site yioop.com uses the PDO interface to connect to a Postgres database. For a Yioop system, the database is used to store information about what users are allowed to use the admin panel and what activities and roles these users have. Unlike many database systems, if a sqlite3 database is being used then the connection is always a file on the current filesystem and there is no notion of login and password, so in this case only the name of the database is asked for. For sqlite, the database is stored in WORK_DIRECTORY/data. For single user settings with a limited number of news feeds, sqlite is probably the most convenient database system to use with Yioop. If you think you are going to make use of Yioop's social functionality and have many users, feeds, and crawl mixes, using a system like Mysql or Postgres might be more appropriate.

If you would like to use a different DBMS than Sqlite or Mysql, then the easiest way is to select PDO as the Database System and, for the Hostname, give the Data Source Name (DSN) with the appropriate DBMS driver. For example, for Postgres one might have something like:

pgsql:host=localhost;port=5432;dbname=test;user=bruce;password=mypass

You can put the username and password either in the DSN or in the Username and Password fields. The database name field must be filled in with the name of the database you want to connect to. The database name also needs to be included in the DSN, as in the above. PDO and Yioop have been tested to work with Postgres and sqlite; for other DBMSs it might take some tinkering to get things to work.
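A DSN of this shape is just a driver prefix followed by semicolon-separated key=value pairs. The following sketch assembles one for Postgres. The function name is hypothetical, the values are the placeholder ones from the example above, and no escaping of special characters is attempted.

```python
from typing import Optional


def build_pgsql_dsn(host: str, port: int, dbname: str,
                    user: Optional[str] = None,
                    password: Optional[str] = None) -> str:
    """Assemble a Postgres DSN string for the PDO Hostname field."""
    parts = [f"host={host}", f"port={port}", f"dbname={dbname}"]
    # Credentials are optional here because they can instead be entered
    # in the separate Username and Password fields.
    if user is not None:
        parts.append(f"user={user}")
    if password is not None:
        parts.append(f"password={password}")
    return "pgsql:" + ";".join(parts)
```

Calling build_pgsql_dsn("localhost", 5432, "test") yields the credential-free form, which suits the case where the username and password are supplied in the form fields instead.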

When switching database information, Yioop first checks whether a usable database with the user-supplied data exists. If it does, then it uses it; otherwise, it tries to create a new database. Yioop comes with a small sqlite demo database (public_default.db) in the src/data directory, and this is used to populate the installation database in this case. This database has one account, root, with no password, which has privileges on all activities. Since different databases associated with a Yioop installation might have different user accounts set up, you might have to sign in again after changing database information. Also, in src/data there is another small sqlite3 database, private_default.db, which is used when users want to have encrypted Groups, as opposed to storing groups in the database in plain text.

The Account Registration fieldset is used to control how users obtain accounts for a Yioop installation. The dropdown at the start of this fieldset allows one to select one of four possibilities: Disable Registration, users cannot register themselves, only the root account can add users; No Activation, user accounts are immediately activated once a user signs up; Email Activation, after registering, users must click on a link which comes in a separate email to activate their accounts; and Admin Activation, after registering, an admin account must activate the user before the user is allowed to use their account. When Disable Registration is selected, the Suggest A Url form and link on the tool.php page are disabled as well; for all other registration types this link is enabled. If Email Activation is chosen, then the rest of this fieldset can be used to specify the email address from which the activation email is sent to the user. The Send Mail From Media Updater checkbox controls whether emails are sent immediately from the web app or are queued and then sent out by the Media Updater process or processes. The checkbox Use PHP mail() function controls whether to use the mail() function in PHP to send the mail. This only works if mail can be sent from the local machine. Alternatively, if this is not checked, one can configure an outgoing SMTP server to send the email through.

The Proxy Server fieldset is used to control which proxies to use while crawling. By default Yioop does not use any proxies while crawling. A Tor Proxy can serve as a gateway to the Tor Network. Yioop can use this proxy to download .onion URLs on the Tor network. The configuration given in the example above works with the Tor Proxy that comes with the Tor Browser. This proxy needs to be running for Yioop to make use of it. A more robust approach than using the Tor Browser to relay is to install the tor relay executable. From the command line you can install this using brew (MacOS), apt (Ubuntu Linux), or choco (Windows) using a command like:

brew install tor

Then use the setting 127.0.0.1:9050 in Yioop. Beneath the Tor Proxy input field is a checkbox labelled Crawl via Proxies. Checking this box will reveal a textarea labelled Proxy Servers. You can enter the address:port, address:port:proxytype, or address:port:proxytype:username:password of the proxy servers you would like to crawl through. If proxy servers are used, Yioop will send each page download request to a randomly chosen server on the list, which will proxy the request to the site hosting the page. To some degree this can make the download site think the request is coming from a different IP address (and potentially location) than it actually is. In practice, servers can often use HTTP headers to guess that a proxy is being used.
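The three accepted proxy line formats and the random selection can be sketched as follows. This is not Yioop's parser. The class and function names are hypothetical, the proxy type value socks5 in the usage note is only a placeholder, and addresses are assumed to contain no colons (so IPv6 literals are out of scope).

```python
import random
from typing import List, NamedTuple, Optional


class Proxy(NamedTuple):
    address: str
    port: int
    proxy_type: Optional[str] = None
    username: Optional[str] = None
    password: Optional[str] = None


def parse_proxy(line: str) -> Proxy:
    """Parse address:port[:proxytype[:username:password]] into a Proxy."""
    # Split at most four times so a password containing ':' stays intact.
    fields = line.strip().split(":", 4)
    if len(fields) not in (2, 3, 5):
        raise ValueError(f"unsupported proxy format: {line!r}")
    proxy_type = fields[2] if len(fields) >= 3 else None
    username, password = (fields[3], fields[4]) if len(fields) == 5 else (None, None)
    return Proxy(fields[0], int(fields[1]), proxy_type, username, password)


def pick_proxy(lines: List[str]) -> Proxy:
    """Choose one proxy uniformly at random from the non-empty lines."""
    proxies = [parse_proxy(line) for line in lines if line.strip()]
    return random.choice(proxies)
```

A line such as 10.0.0.2:1080:socks5:alice:s3cret parses into all five fields, while 10.0.0.1:8080 leaves the type and credentials unset. Calling pick_proxy once per download spreads requests across the list.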

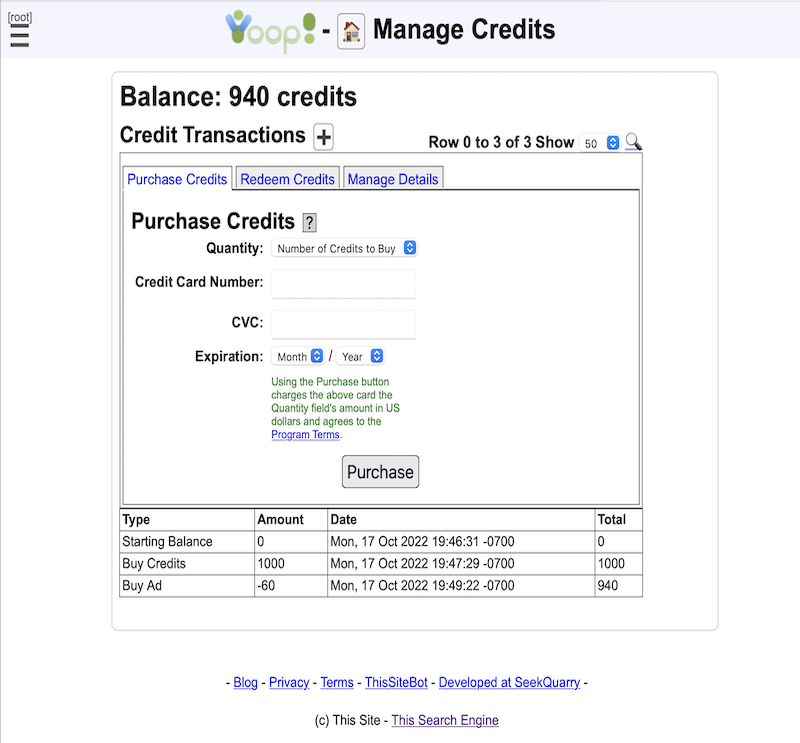

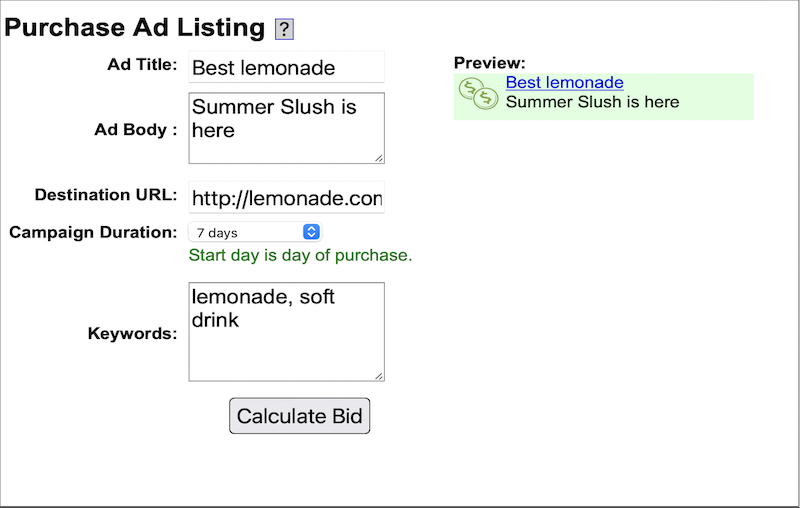

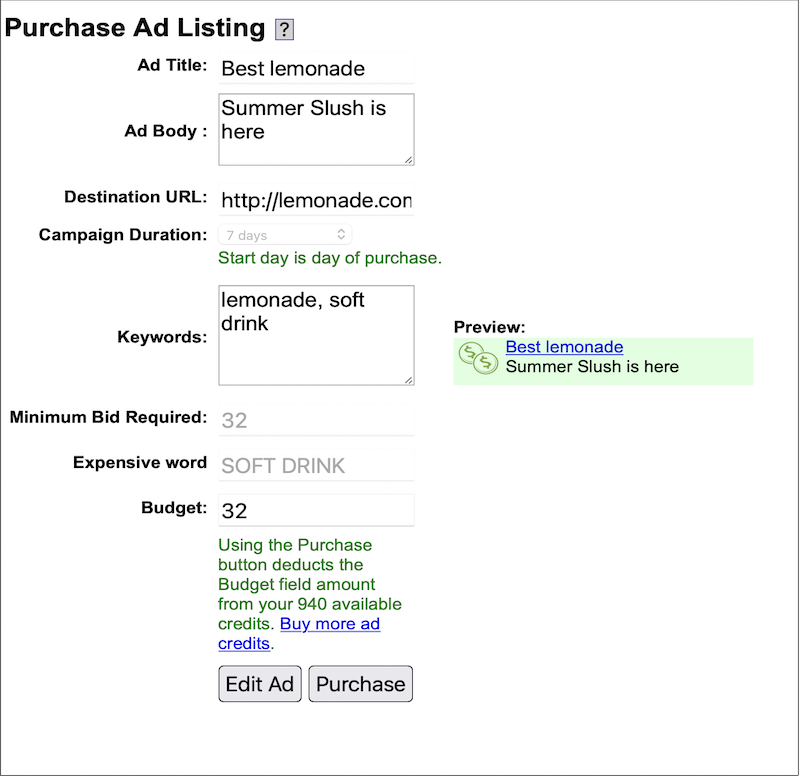

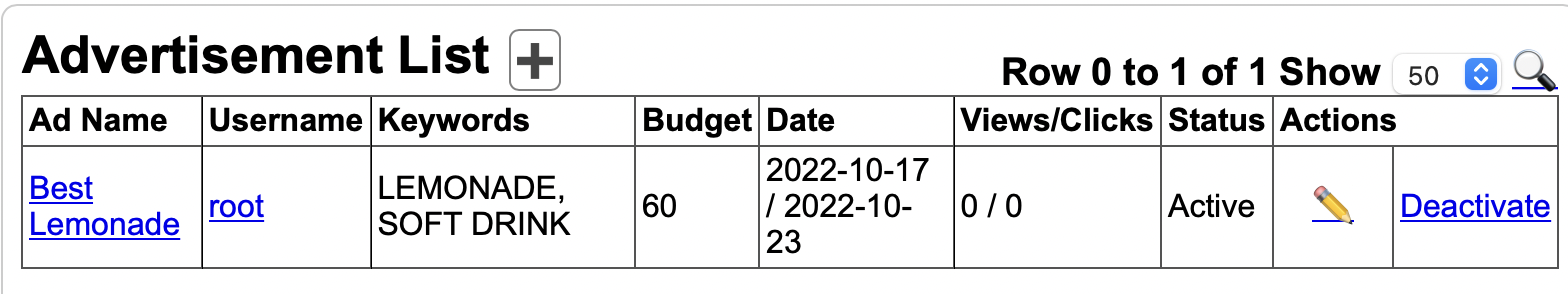

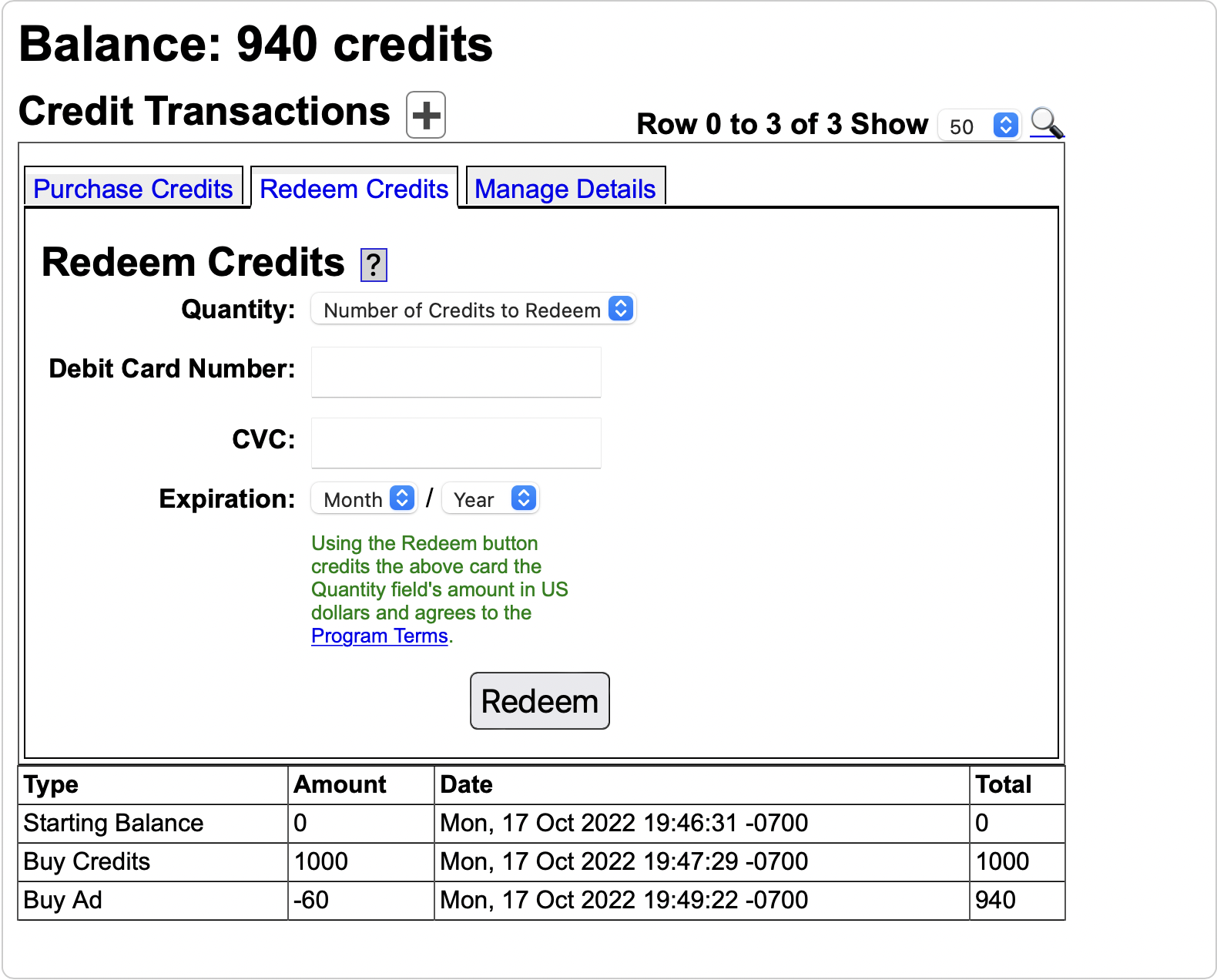

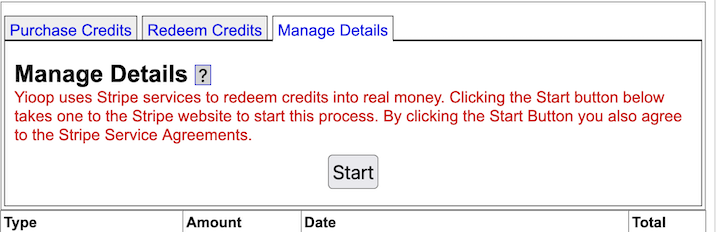

The Monetization fieldset is used to specify whether advertising will be served with Yioop search results, and if it is, what kind. It also controls whether or not users can charge credit fees for groups. The Type dropdown controls this, allowing the administrator to choose between None , External Ad Server , Group Fees , Keyword Advertisements , and Group Fees and Keyword Ads . If Group Fees or Group Fees and Keyword Ads is chosen, then when a user creates a new group they can select a registration type under which a certain number of credits is charged to join. Yioop's built-in mechanism for keyword advertising is described in the Keyword Advertising section and is activated if either Keyword Advertisements or Group Fees and Keyword Ads is selected. External Ad Server refers to advertising scripts (such as Google Ad Words, Bidvertiser, Adspeed, etc) which are to be added on search result pages or on discussion thread pages. There are four possible placements of ads: None -- don't display advertising at all, Top -- display banner ads beneath the search bar but above search results, Side -- display skyscraper ads in a column beside the search results, and Both -- display both banner and skyscraper ads. Choosing any option other than None reveals text areas where one can insert the Javascript one would get from the ad network. The Global Ad Script text area is used for any Javascript or HTML the ad provider wants you to include in the HTML head tag for the web page (many advertisers don't need this).

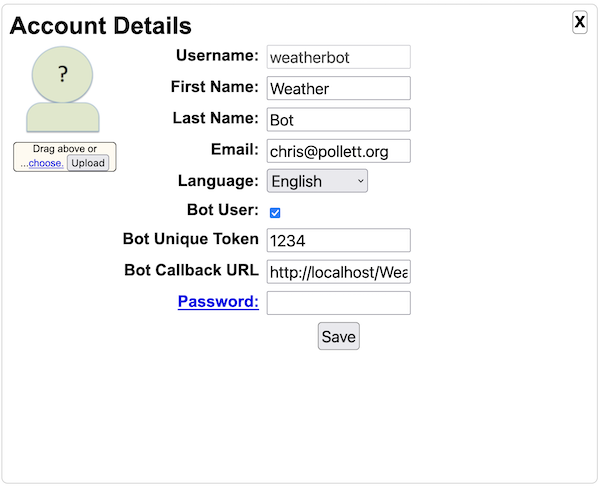

The Bot Configuration fieldset is used to control whether users of this Yioop instance can be chat bots. If this is enabled, a Yioop user can declare themselves a chat bot under Account Home. Such users can be programmed to give automated responses to group posts using the Chat Bot Interface .

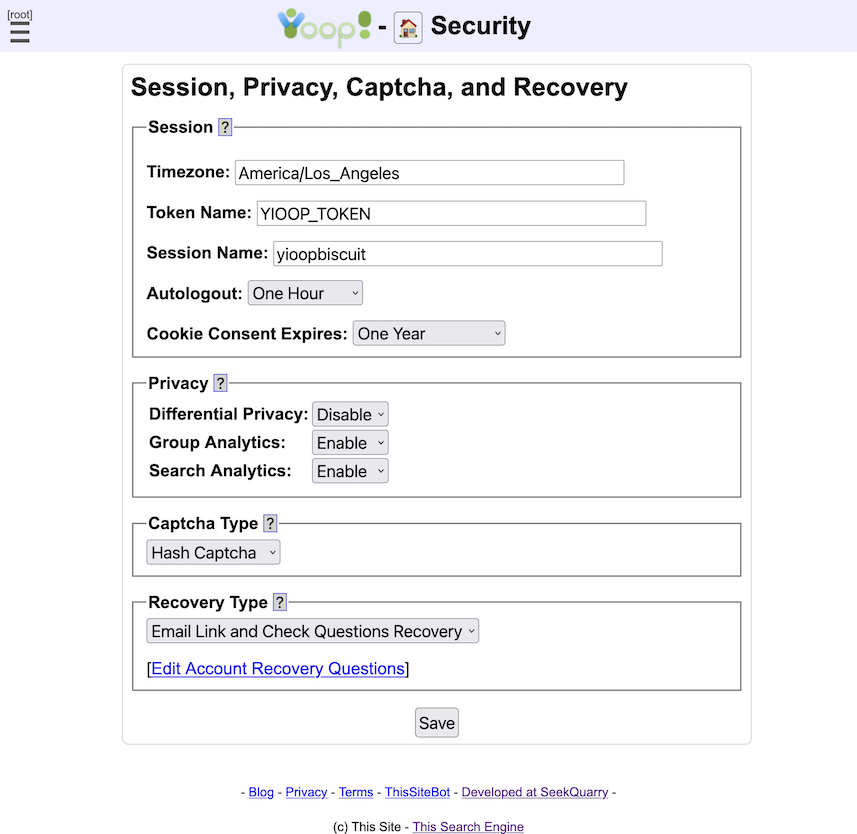

The Security activity looks like:

The Session fieldset is used to control how a user session in Yioop behaves. The Timezone field controls the timezone used for dating posts and other events once a user is logged in. The Token Name field controls the name of the token variable which appears in URLs and is used in conjunction with cookies to determine if a user is logged in. It is there to prevent cross-site request forgery attacks on a Yioop website. The Session Name field controls the name of the cookie that will be stored in a user's browser to maintain a session once logged into Yioop. The Autologout dropdown specifies how long a session can be inactive before a user will be logged out. The Cookie Consent Expires field controls how long session cookies last before the message that a user needs to accept cookies to use the site fully appears. This message might be needed in some jurisdictions because of laws like GDPR. The way this message is currently implemented in Yioop prevents the setting of any cookies in a user's browser until the consent message button is clicked.

The Privacy fieldset controls a variety of options with respect to how analytics from individual users are collected by a Yioop instance. Differential Privacy controls whether the group and thread view statistics displayed under the Account Home and My Groups activities are fuzzified to try to prevent individual users from being identifiable by changes in counts. Group Analytics controls whether information about group and thread views is collected and whether statistics about these views are visible to group owners. If this is disabled, statistics that were previously collected are not deleted; however, they will no longer be viewable and no future views will be recorded. Search Analytics controls whether information about search queries is collected and aggregated. If this is disabled, statistics that were previously collected are not deleted; however, they will no longer be viewable and no future collection will occur. Also, if this is disabled but keyword advertisements are enabled, then impressions with respect to advertised keywords will still be collected.

The Captcha Type fieldset controls what kind of captcha will be used during account registration, password recovery, and if a user wants to suggest a url. The captcha type only has an effect if under the Server Settings activity, Account Registration is not set to Disable Registration. The choices for captcha are: Graphic Captcha , the user needs to enter a sequence of characters from a distorted image; and Hash Captcha , the user's browser (the user doesn't need to do anything) needs to extend a random string with additional characters to get a string whose hash begins with a certain lead set of characters. Of these, Hash Captcha is probably the least intrusive but requires Javascript and might run slowly on older browsers. The graphic captcha is probably the one people are most familiar with.
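The Hash Captcha idea, extending a random string until its hash begins with a required lead set of characters, can be illustrated with a small proof-of-work sketch. This is not Yioop's implementation: the use of SHA-256, the decimal counter used as the extension, and the function names are all assumptions made for illustration, and in Yioop the search runs as Javascript in the user's browser.

```python
import hashlib
import itertools


def hash_has_prefix(challenge: str, suffix: str, prefix: str) -> bool:
    """Server-side check: does hash(challenge + suffix) start with prefix?"""
    digest = hashlib.sha256((challenge + suffix).encode("utf-8")).hexdigest()
    return digest.startswith(prefix)


def solve_hash_captcha(challenge: str, prefix: str) -> str:
    """Client-side search: try suffixes 0, 1, 2, ... until one works."""
    for counter in itertools.count():
        suffix = str(counter)
        if hash_has_prefix(challenge, suffix, prefix):
            return suffix


# Hypothetical challenge string and required prefix.
challenge = "random-string-from-server"
suffix = solve_hash_captcha(challenge, "00")
print(suffix, hash_has_prefix(challenge, suffix, "00"))
```

Each extra hexadecimal character in the required prefix multiplies the expected number of attempts by 16, which is why a scheme of this kind costs the client noticeable work while verification on the server takes a single hash.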

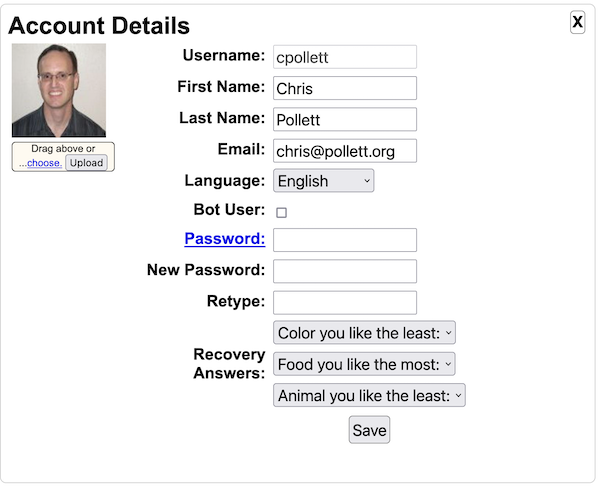

The Recovery Type fieldset controls the recovery option used when a user forgets their password. The choices are: No Password Recovery Link , in which case there is no automated option for recovering one's password; Email Link Password Recovery , in which case a link is sent to a user's email and clicking on that link allows the user to change their password; and Email Link and Check Questions Recovery , which is the same as Email Link Password Recovery except that to change the password the user must enter the answers to their recovery questions correctly.

The Edit Account Recovery Questions link of the Recovery Type fieldset takes one to a filtered view of the Localization activity where one can edit the Recovery Questions for the current locale (you can change the current locale in Settings). There is a fixed list of test question slots you can localize. A single test consists of a more question, a less question, and a comma-separated list of possibilities. For example,

Animal you like the best? Animal you like the least? ant,bunny,cat,cockroach,dog,goldfish,hamster,horse,snake,spider,tiger,whale

When challenging a user, Yioop picks a subset of tests. For each test, it randomly chooses between the more question and the less question. It then picks a subset of the ordered list of choices, randomly permutes them, and presents them to the user in a dropdown.
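The way a single challenge is assembled from a test can be sketched roughly as follows. This is a Python illustration under stated assumptions, not Yioop's code: `RecoveryTest`, `build_challenge`, and the `num_choices` parameter are hypothetical names, and how Yioop scores the user's answer is not modelled here.

```python
import random
from typing import List, NamedTuple, Tuple


class RecoveryTest(NamedTuple):
    """One localizable test: two question wordings plus the choices."""
    more_question: str
    less_question: str
    choices: List[str]


def build_challenge(test: RecoveryTest, num_choices: int,
                    rng: random.Random) -> Tuple[str, List[str]]:
    """Pick the more or less wording, then a shuffled subset of choices."""
    question = rng.choice([test.more_question, test.less_question])
    subset = rng.sample(test.choices, num_choices)  # subset without repeats
    rng.shuffle(subset)  # explicit permutation step for the dropdown
    return question, subset


animals = RecoveryTest(
    "Animal you like the best?",
    "Animal you like the least?",
    "ant,bunny,cat,cockroach,dog,goldfish,hamster,horse,snake,spider,tiger,whale".split(","),
)
question, options = build_challenge(animals, 4, random.Random())
print(question, options)
```

`random.sample` already returns its picks in random order, so the extra shuffle is redundant in practice; it is kept only to mirror the separate "pick a subset" and "permute" steps in the description.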

Yioop's captcha system tries to prevent attacks where a machine quickly tries several possible answers to a captcha. Yioop has an IP address based timeout system (implemented in models/visitor_model.php). Initially a timeout of one second between requests involving a captcha is in place. An error screen shows up if multiple requests from the same IP address for a captcha page are made within the timeout period. Every mistaken entry of a captcha doubles this timeout period. The timeout period for an IP address is reset on a daily basis back to one second.
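The doubling timeout described above can be sketched in a few lines. This is an in-memory Python illustration, not the code in models/visitor_model.php: the class and method names are hypothetical, the daily reset is modelled as 24 hours after an address is first seen, and a rejected request is assumed to restart the waiting period.

```python
from typing import Dict


class CaptchaThrottle:
    """Per-IP timeout that doubles on each failed captcha attempt."""

    INITIAL_TIMEOUT = 1.0            # seconds, from the description above
    RESET_PERIOD = 24 * 60 * 60.0    # assumed meaning of "daily"

    def __init__(self) -> None:
        self._state: Dict[str, dict] = {}

    def _entry(self, ip: str, now: float) -> dict:
        entry = self._state.get(ip)
        if entry is None or now - entry["since"] >= self.RESET_PERIOD:
            # First visit, or the daily reset: back to one second.
            entry = {"timeout": self.INITIAL_TIMEOUT, "last": None, "since": now}
            self._state[ip] = entry
        return entry

    def allow(self, ip: str, now: float) -> bool:
        """Return False if this request arrives inside the timeout."""
        entry = self._entry(ip, now)
        last = entry["last"]
        entry["last"] = now
        return last is None or now - last >= entry["timeout"]

    def record_failure(self, ip: str, now: float) -> None:
        """A mistaken captcha entry doubles the timeout for this IP."""
        self._entry(ip, now)["timeout"] *= 2


throttle = CaptchaThrottle()
print(throttle.allow("203.0.113.7", now=0.0))   # first request
print(throttle.allow("203.0.113.7", now=0.5))   # too soon after the first
```

Because the timeout doubles on every failure, an automated guesser from one address is limited to roughly a dozen or so attempts per day before the wait between attempts exceeds the reset period.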

Upgrading Yioop

If you have an older version of Yioop that you would like to upgrade, make sure to back up your data. Yioop stores data in two places: a database, the details of which can be found by looking at the Server Settings activity; and a WORK_DIRECTORY folder, the path to which can be found in the Configure activity, Search Engine Work Directory form. Once your backup is done, how you should upgrade depends on how you installed Yioop in the first place. We give three techniques below, the first of which will always work, but might be slightly more complicated than the other two.

If you originally installed Yioop from the download page, download the latest version of Yioop. Extract the src subfolder of the zip file you get and use it to replace the current src folder of your installation. If you had a file src/configs/LocalConfig.php in your old installation, make sure to copy this file to the upgrade. Make sure Yioop has write permissions on src/configs/Config.php. Yioop should then be able to complete the upgrade process. If it doesn't look like your old work directory data is showing up, please check the Configure activity, Search Engine Work Directory form and make sure it has the same value as before the upgrade.

If you have been getting Yioop directly from the git repository, then to upgrade Yioop you can just issue the command:

git pull

from a command prompt after you've switched into the Yioop directory.

If you are using Yioop as part of a Composer project, then issuing the command:

composer update

should upgrade Yioop to the most recent version compatible with your project.

Summary of Files and Folders

The Yioop search engine consists of three main scripts:

- src/executables/Fetcher.php

- Used to download batches of urls provided by the queue server.

- src/executables/QueueServer.php

- Maintains a queue of urls that are going to be scheduled to be seen. It also keeps track of what has been seen and robots.txt info. Its last responsibility is to create the index_archive that is used by the search front end.

- index.php

- Acts as the search engine web page. It is also used to handle message passing between the fetchers (multiple machines can act as fetchers) and the queue server. Finally, when Yioop is run as its own web server, this file launches and initializes the web server.

The file index.php is used when you browse to an installation of a Yioop website. The description of how to use a Yioop web site is given in the sections starting from The Yioop User Interface section. The files Fetcher.php and QueueServer.php are only connected with crawling the web. If you already have a stored crawl of the web, then you no longer need to run or use these programs. For instance, you might obtain a crawl of the web on your home machine and upload the crawl to an instance of Yioop on the ISP hosting your website. This website could serve search results without making use of either Fetcher.php or QueueServer.php. To perform a web crawl you need to use both of these programs as well as the Yioop web site. This is explained in detail in the section on Performing and Managing Crawls .

The Yioop folder itself consists of several files and sub-folders. The file index.php as mentioned above is the main entry point into the Yioop web application. There is also an .htaccess file that is used to route most requests to the Yioop folder to go through this index.php file. If you look at the top folder structure of Yioop it has three main subfolders: src , work_directory , and tests . If you are developing a Composer project with Yioop there might also be a vendor folder which contains a class autoloader for the PHP classes used in your project. The src folder contains the source code for Yioop. The work_directory is the default location where Yioop stores indexes as well as where you can put any site specific customizations. As the location of where Yioop stores stuff is customizable, we will write WORK_DIRECTORY in caps to refer to the location of this folder in the current instance of Yioop. Finally, tests is a folder of unit tests for various Yioop classes. We now describe the major files and folders in each of these folders in a little more detail.

The src folder contains several useful files as well as additional sub-folders. yioopbar.xml is the xml file specifying how to access Yioop as an Open Search Plugin. favicon.ico is used to display the little icon in the url bar of a browser when someone browses to the Yioop site. The other files (as opposed to folders), in src are used only if url rewrites are turned off and provide access to a wiki page or feed with a related name. For example, blog.php can serve the site wide blog if redirects are off; privacy.php, the privacy policy; terms.php, the terms of service, etc.

We next look at what the src folder's various sub-folders contain:

- configs

- This folder contains configuration files. You will probably not need to edit any of these files directly as you can set the most common configuration settings from within the admin panel of Yioop. The file Config.php controls a number of parameters about how data is stored, how, and how often, the queue server and fetchers communicate, and which file types are supported by Yioop. ConfigureTool.php is a command-line tool which can perform some of the configurations needed to get a Yioop installation running. It is only necessary in some virtual private server settings -- the preferred way to configure Yioop is through the web interface. Createdb.php can be used to create a bare instance of the Yioop database with a root admin user having no password. This script is not strictly necessary as the database should be creatable via the Admin panel; however, it can be useful if the database isn't working for some reason. Createdb.php includes the file PublicHelpPages.php from WORK_DIRECTORY/app/configs if present or from BASE_DIR/configs if not. This file contains the initial rows for the Public and Help group wikis. When upgrading, it is useful to export the changes you have made to these wikis to WORK_DIRECTORY/app/configs/PublicHelpPages.php . This can be done by running the file ExportPublicHelpDb.php which is in the configs folder. Also in the configs folder is the file default_crawl.ini . This file is copied to WORK_DIRECTORY after you set this folder in the admin/configure panel. There it is renamed as crawl.ini and serves as the initial set of sites to crawl until you decide to change these. The file GroupWikiTool.php can be used to determine where the resources for a wiki page are stored, as well as to do simple manipulations on the way the resources are archived when new versions of a wiki page are made. Running it with no command line arguments gives a description of how it works.
The file TokenTool.php is a tool which can be used to help in term extraction during crawls and for making tries which can be used for word suggestions for a locale. To help word extraction, this tool can generate a word bloom filter in a locale folder (see below). This filter can be used to segment strings into words for languages such as Chinese that don't use spaces to separate words in sentences. For trie and segmenter filter construction, this tool can use a file that lists words one per line. TokenTool.php can also be used with Yandex translate to translate locale strings and Public and Help wiki pages from the English locale to other locales.

- controllers

- The controllers folder contains all the controller classes used by the web component of the Yioop search engine. Most requests coming into Yioop go through the top level index.php file. The query string (the component of the url after the ?) then says who is responsible for handling the request. In this query string there is a part which reads c= ... This says which controller should be used. The controller uses the rest of the query string, such as the a= variable, which names the activity function to call, and the arg= variable, to determine which data must be retrieved from which models, and finally which view with what elements on it should be displayed back to the user. Within the controllers folder is a sub-folder components. A component is a collection of activities which may be added to a controller so that it can handle a request.

- css

- This folder contains the stylesheets used to control how web page tags should look for the Yioop site when rendered in a browser.

- data

- This folder contains the default sqlite3 databases (public_default.db and private_default.db) for a new Yioop installation. Whenever the WORK_DIRECTORY is changed, it is these databases which are initially copied into the WORK_DIRECTORY to serve as the databases of allowed users for the Yioop system.

- examples

- This folder contains files QueryCacher.php , SearchApi.php , StockBot.php , and WeatherBot.php . QueryCacher.php gives an example of how to write a script that executes a sequence of queries against a Yioop index. This could be useful for caching queries to improve query performance. SearchApi.php gives an example of how to use the Yioop search function api. Finally, StockBot.php and WeatherBot.php give examples of how to code a Yioop Chat Bot.

- executables